Recently I was conversing with someone about my PowerShell code that downloads tools from the live Sysinternals site. If you search the Internet, you'll find plenty of ways to achieve the same goal. But we were running into a problem where PowerShell was failing to get information from the site. From my testing and research, my guess is that it was a timing issue that shows up when the site is too busy. So I started playing around with some alternatives.

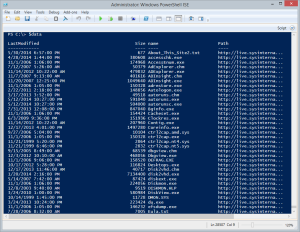

I knew that I could easily get the HTML content from http://live.sysinternals.com with any of several commands. I chose Invoke-RestMethod for the sake of simplicity.

[string]$html = Invoke-RestMethod "http://live.sysinternals.com"

Because $html is one long string with predictable patterns, I realized I could use my ConvertFrom-Text script, which converts text to objects using named regular expression patterns. In my test script, I dot source it.

#need this function

. C:\scripts\convertfrom-text.ps1
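If you don't have that script handy, the general idea of turning named captures into objects can be sketched in a few lines. This is not the ConvertFrom-Text script itself; ConvertFrom-NamedMatch is a hypothetical stand-in I'm using purely for illustration, and it assumes PowerShell 5 or later for the [regex]::new() syntax.

```powershell
# Hypothetical stand-in for illustration only; not the actual ConvertFrom-Text script.
function ConvertFrom-NamedMatch {
    param(
        [Parameter(Mandatory)]
        [string]$Pattern,

        [Parameter(Mandatory, ValueFromPipeline)]
        [string]$InputObject
    )
    process {
        # Build a case-insensitive regex, the same default behavior as -match
        $regex = [regex]::new($Pattern, [System.Text.RegularExpressions.RegexOptions]::IgnoreCase)

        # GetGroupNames() also returns numbered groups ("0", "1", ...); keep only the named ones
        $names = $regex.GetGroupNames() | Where-Object { $_ -notmatch '^\d+$' }

        # Emit one object per match, with one property per named capture
        foreach ($m in $regex.Matches($InputObject)) {
            $props = [ordered]@{}
            foreach ($n in $names) {
                $props[$n] = $m.Groups[$n].Value
            }
            [pscustomobject]$props
        }
    }
}

# Example: two matches in the string produce two objects with Key and Value properties
$pairs = 'alpha=1 beta=22' | ConvertFrom-NamedMatch -Pattern '(?<key>\w+)=(?<value>\d+)'
```

Every captured value comes back as a string, which is why the later steps cast the date and size to more useful types.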

Now for the fun part. I had to build a regular expression pattern. Eventually I arrived at this:

$pattern = "(?<date>\w+,\s\w+\s\d{1,2},\s\d{4}\s+\d{1,2}:\d{2}\s+\w{2})\s+(?<size>\d+)\s+<A HREF=""/(?<name>\w+(\.\w+){1,2})"
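A quick way to sanity-check a pattern like this is to run it against a single line with the -match operator before pointing it at the whole page. The sample line here is something I made up to resemble one entry in the listing; it was not captured from the site, so treat the values as placeholders.

```powershell
# Same pattern as above; the doubled quotes ("") escape a quote inside the double-quoted string
$pattern = "(?<date>\w+,\s\w+\s\d{1,2},\s\d{4}\s+\d{1,2}:\d{2}\s+\w{2})\s+(?<size>\d+)\s+<A HREF=""/(?<name>\w+(\.\w+){1,2})"

# Illustrative line only: invented to look like a listing entry, not real site output
$sample = 'Friday, May 30, 2014  4:25 PM       479832 <A HREF="/accesschk.exe">accesschk.exe</A><br>'

if ($sample -match $pattern) {
    # After a successful -match, $Matches holds each named capture as a string
    $entry = [pscustomobject]@{
        Date = $Matches['date']
        Size = $Matches['size']
        Name = $Matches['name']
    }
    $entry
}
else {
    Write-Warning "The pattern did not match the sample line"
}
```

If the site ever changes its listing format, a one-line test like this makes it obvious which part of the pattern needs adjusting.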

If you have used the Sysinternals site, you'll know there is also a Tools "subfolder" that appears to be essentially the same as the top level site. My pattern is for the top level site. Armed with this pattern, it wasn't difficult to create an array of objects for each tool.

$data = $html | ConvertFrom-Text $pattern |

Select @{Name="LastModified";Expression={$_.Date -as [DateTime]}},

@{Name="Size";Expression={$_.Size -as [int]}},Name,

@{Name="Path";Expression={ "http://live.sysinternals.com/$($_.name)"}}

Next, I check my local directory and get the most recent file.

#get most recently modified local file

$localfiles = dir G:\Sysinternals

$lastLocal = ( $localfiles | sort LastWriteTime | select -last 1).LastWriteTime

Then I can test for files that don't exist locally or are newer on the site.

#find files that have a newer time stamp or files that exist remotely but not locally

$needed = $data | where { ($_.LastModified -gt $lastlocal) -OR ( $localfiles.name -notcontains $_.name ) }
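To see how that filter behaves without touching the site or the disk, here is a small sketch using made-up stand-ins for $data and $localfiles. The file names and dates are invented for illustration.

```powershell
# Made-up stand-ins for the remote listing ($data) and the local directory ($localfiles)
$data = @(
    [pscustomobject]@{ Name = 'old.exe';   LastModified = [datetime]'2014-01-01' }
    [pscustomobject]@{ Name = 'fresh.exe'; LastModified = [datetime]'2014-06-01' }
    [pscustomobject]@{ Name = 'new.exe';   LastModified = [datetime]'2014-02-01' }
)
$localfiles = @(
    [pscustomobject]@{ Name = 'old.exe';   LastWriteTime = [datetime]'2014-01-15' }
    [pscustomobject]@{ Name = 'fresh.exe'; LastWriteTime = [datetime]'2014-03-01' }
)

# Newest local timestamp, found the same way as in the script
$lastLocal = ($localfiles | Sort-Object LastWriteTime | Select-Object -Last 1).LastWriteTime

# Keep anything newer than the newest local file, or anything missing locally
$needed = $data | Where-Object {
    ($_.LastModified -gt $lastLocal) -or ($localfiles.Name -notcontains $_.Name)
}

$needed.Name
```

With these stand-in values, fresh.exe is selected because it is newer than the newest local file, and new.exe is selected because it has no local copy. old.exe is skipped on both counts.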

If there are needed files, then I create a System.Net.WebClient object and download the files.

if ($needed) {

Write-Host "Getting $(@($needed).count) updated files" -ForegroundColor Cyan

$needed | foreach -begin { $wc = New-Object System.Net.WebClient

} -process {

$target = Join-Path -Path "G:\Sysinternals" -ChildPath $_.name

$source = $_.Path

Write-host "Updating $target from $source" -ForegroundColor Green

$wc.DownloadFile($source,$target)

}

}

else {

Write-Host "No updated files detected" -ForegroundColor Cyan

}

The end result is that I can update my local Sysinternals folder very quickly without worrying about the timing problems I ran into when using the WebClient service.