Over the years I've come up with a number of PowerShell tools to download the Sysinternals tools to my desktop. And yes, I know that with PowerShell 5 and PowerShellGet I could download and install a Sysinternals package, although that assumes the package is current. But that's not really the point. Instead, I want to use today's Friday Fun to offer you an example of using a workflow as a scripting tool. In this case, the goal is to download the Sysinternals files from the Internet.


First, you'll need to get a copy of the workflow from GitHub.

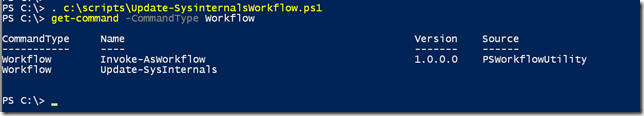

A workflow is like a function in that you need to load it into your PowerShell session, for example by dot sourcing the file:

```powershell
. c:\scripts\Update-SysinternalsWorkflow.ps1
```

This will give you a new command.

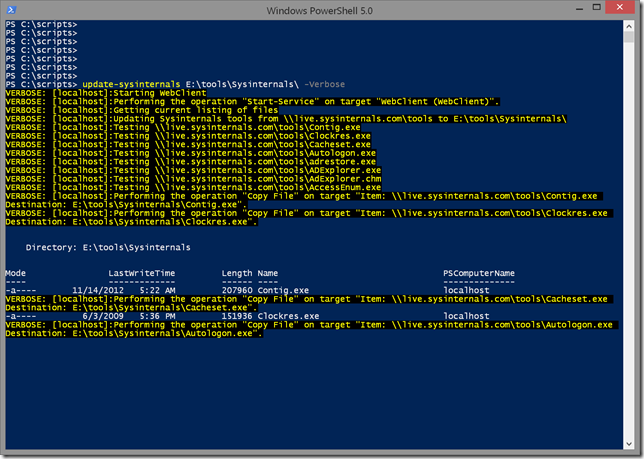

The workflow can now be executed like any other command.

The main advantage of a workflow is that it can process items in parallel, and you can throttle that activity. In my workflow, I process up to 8 files at a time.

```powershell
Sequence {
    Write-Verbose -Message "Updating Sysinternals tools from \\live.sysinternals.com\tools to $Destination"

    #download files in parallel, at most 8 at a time
    foreach -parallel -throttle 8 ($file in $current) {
        #construct a path to the live web version and compare dates
        $online = Join-Path -Path \\live.sysinternals.com\tools -ChildPath $file.Name
        Write-Verbose -Message "Testing $online"

        #copy only when the online file is newer (by date) than the local one
        if ((Get-Item -Path $online).LastWriteTime.Date -gt $file.LastWriteTime.Date) {
            Copy-Item -Path $online -Destination $Destination -PassThru
        }
    }
}
```
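In the excerpt, the loop checks each file in $current against its online copy. The rule the workflow follows overall, download a file when it is missing from the destination or newer online, can also be sketched as a plain function that is easy to test outside a workflow. This is a minimal sketch, not the logic from the GitHub script: Get-SysinternalsDownloadList is a hypothetical name, and it assumes both inputs are objects with Name and LastWriteTime properties. Like the loop, it compares dates only.

```powershell
# Hypothetical helper (not from the GitHub script): return the names of
# online files that are missing locally or newer online. Both inputs are
# assumed to be objects with Name and LastWriteTime properties.
function Get-SysinternalsDownloadList {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [object[]]$Online,

        [object[]]$Local = @()
    )

    # Index local files by name; PowerShell hashtable keys are
    # case-insensitive by default, matching Windows file names
    $localByName = @{}
    foreach ($item in $Local) {
        $localByName[$item.Name] = $item
    }

    foreach ($item in $Online) {
        $existing = $localByName[$item.Name]
        if ($null -eq $existing) {
            # Not in the destination folder yet
            $item.Name
        }
        elseif ($item.LastWriteTime.Date -gt $existing.LastWriteTime.Date) {
            # Newer online; compare dates and ignore the time of day
            $item.Name
        }
    }
}
```

On a real system, assuming the WebClient service is running, $Online could come from Get-ChildItem on \\live.sysinternals.com\tools and $Local from Get-ChildItem on the destination folder.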

One thing to be careful about in a workflow is scope. Don't assume that a variable can be accessed everywhere in the workflow. That's why I explicitly scope some variables so that they persist across sequences.

I really hope that parallel processing makes its way into the language one day, because frankly that is the only reason I am using a workflow. And it's quick: I downloaded the entire directory in a little over a minute on my FiOS connection. The workflow also downloads only files that are either newer online or missing from the specified directory.
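PowerShell 7 did eventually add throttled parallel processing to the language through ForEach-Object -Parallel with -ThrottleLimit, and workflows are not supported in PowerShell 7 at all. Here is a minimal sketch assuming PowerShell 7 or later. Copy-FileParallel is a hypothetical helper, not part of the author's script; it simply copies a list of files into a destination folder, at most 8 at a time by default.

```powershell
#requires -Version 7.0

# Hypothetical helper: copy files into a folder in parallel, throttled.
function Copy-FileParallel {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string[]]$Path,

        [Parameter(Mandatory)]
        [string]$Destination,

        # Maximum number of copies running at the same time
        [int]$ThrottleLimit = 8
    )

    $Path | ForEach-Object -Parallel {
        # Each iteration runs in its own runspace, so the destination
        # has to be brought in with the $using: scope modifier
        Copy-Item -Path $_ -Destination $using:Destination -PassThru
    } -ThrottleLimit $ThrottleLimit
}
```

Because the iterations run concurrently, the copied items may come back in a different order than the input paths.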

If you want to learn more about workflows, there is material on them in PowerShell in Depth.

I hope you find this useful. Consider it my Valentine to you.

NOTE: Because the script is on GitHub, the code embedded in this post is always the latest version. Since this article was posted I have made a few changes, and the article text may not always reflect them.

Thanks, this will be handy.

Two comments:

1. On my system I have to run this elevated; otherwise Start-Service WebClient fails.

2. I needed to add -Name to Start-Service and Stop-Service, and -FilterScript to Where-Object; otherwise it complains that it cannot determine the parameter set. From what I understand, this is a workflow limitation.

After that it worked great.


The first version of PowerShell workflow required full parameter names, but I don't think that is still a requirement in later versions of PowerShell. I'm glad you worked around it, though. And that's a good point about the need for an elevated session.

The version on GitHub has been updated since I first wrote this article. I added some much-needed error handling and revised (and tested) it so that you can use it to download the tools to another desktop remotely.

This version requires PowerShell 4, since I no longer have a v3 client to test with and I'm using the Where() method introduced in v4. I also made a few changes to make it even more efficient.
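To illustrate the two filtering styles mentioned in this exchange, here is a small sketch on made-up file objects: Where-Object with -FilterScript spelled out, as the commenter needed inside the workflow, and the intrinsic Where() method that arrived in PowerShell 4.0. The sample data is invented; nothing here comes from the actual script.

```powershell
#requires -Version 4.0

# Made-up sample objects standing in for Get-ChildItem output
$files = @(
    [pscustomobject]@{ Name = 'procexp.exe'; Length = 4800000 }
    [pscustomobject]@{ Name = 'Eula.txt';    Length = 7500 }
    [pscustomobject]@{ Name = 'procmon.chm'; Length = 60000 }
)

# Cmdlet form with the parameter named explicitly, the style the
# commenter needed inside the workflow
$viaCmdlet = $files | Where-Object -FilterScript { $_.Name -like '*.exe' }

# Intrinsic Where() method (PowerShell 4.0+); it always returns a
# collection, even when only one item matches
$viaMethod = $files.Where({ $_.Name -like '*.exe' })

# Where() also accepts a selection mode, here stopping at the first match
$firstLarge = $files.Where({ $_.Length -gt 10000 }, 'First')
```

Besides being a bit faster on in-memory collections, the method form offers modes such as 'First', 'Last' and 'Split' that Where-Object does not.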

One final note: if you are running PowerShell 5, you can't run a workflow against downlevel versions. Version 5.0 added a new common parameter that the workflow tries to use, so it ultimately fails on platforms not running v5.