If you follow me on Twitter, you know that I have a monthly tweet reminder about running and testing backups. I have to say that the concept of a backup is different today than it was when I started in IT. Now we have cheap disk storage and cloud services. In some ways, our data is constantly being backed up. This is true even for me. I use a number of cloud services to save and synchronize data across multiple devices. For the longest time, I didn't think about any sort of formal backup strategy. But perhaps I'm getting a bit more cautious in my old age. Or maybe paranoid. Lately, I've started worrying about something happening locally that commits bad data to my cloud services. It doesn't really matter what causes the problem, only that I might end up syncing corrupted or bad data to the cloud. I need to be better prepared. Because I'm a PowerShell guy, I need a PowerShell backup system.

I'd like to think I take reasonable precautions. I run ESET Internet Security on my systems. I don't visit dubious web sites. I keep up to date with patches and updates. I've even set up backups of key folders using Windows 10 tools. However, I like having options. Over the last week or so I've been building a PowerShell-based backup solution. Perhaps this isn't something you need, but you might find some of the techniques I'm using helpful in your own related work.

Baseline Backups

My backup approach follows a traditional model of a full backup followed by daily incremental backups. I have a Synology NAS device on my local network that I already use as a backup source. I created archive files of key folders using a naming convention of YearMonthDay_identifier-FULL. It doesn't really matter what tool you use to create the archive. I use WinRAR, so I end up with a file like 20191101_scripts-FULL.rar. I started out trying to use Compress-Archive, but it has a known limitation when it comes to hidden files and folders. And since I wanted to back up my git projects as well, which keep their data in a hidden .git folder, I had to turn to something else. I suppose I could skip these items since I push to GitHub. But not every local project is on GitHub. Regardless, I may have other work to back up that might include a hidden file, so Compress-Archive is not an option. In a future post, I'll share the PowerShell code I'm using to run WinRAR.
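The naming convention is easy to generate in code. Here is a minimal sketch, not the code I actually use: the function name `New-BackupFileName` and the `INCREMENTAL` label are hypothetical, and only the `FULL` pattern comes from the example file name above.

```powershell
# Hypothetical helper that builds names such as 20191101_scripts-FULL.rar
function New-BackupFileName {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string]$Identifier,

        # 'INCREMENTAL' is an assumed label; the post only shows 'FULL'
        [ValidateSet('FULL', 'INCREMENTAL')]
        [string]$Type = 'FULL',

        [datetime]$Date = (Get-Date),

        [string]$Extension = 'rar'
    )

    # Format the date with the invariant culture so the name is the same on every system
    $stamp = $Date.ToString('yyyyMMdd', [cultureinfo]::InvariantCulture)
    '{0}_{1}-{2}.{3}' -f $stamp, $Identifier, $Type, $Extension
}

# Recreate the example name from the text
New-BackupFileName -Identifier scripts -Date '2019-11-01'
```

Putting the date first means the archives sort chronologically by name in any file listing.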

Watching For Files

The major step in my backup process is deciding which files to back up incrementally. For that, I decided to turn to the .NET System.IO.FileSystemWatcher class and an event subscription. Here's a sample of how this works. First, I create an instance of the FileSystemWatcher.

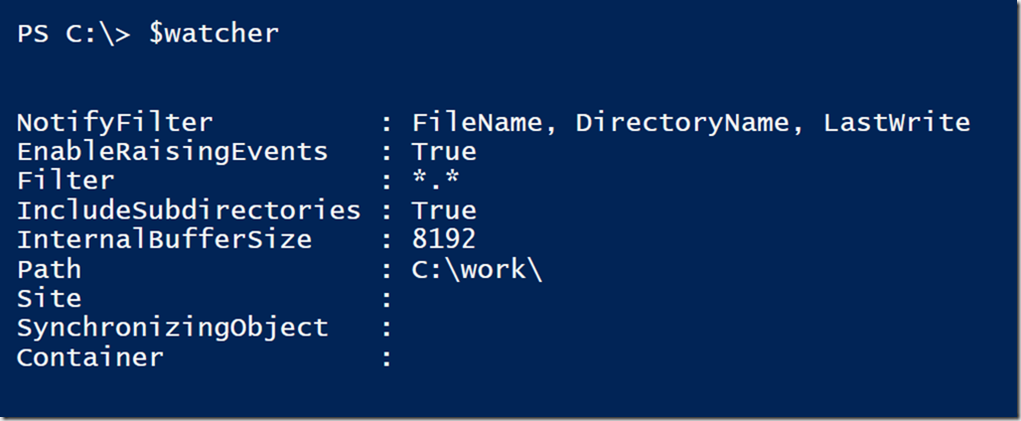

$path = "C:\work\"
#define the watcher object
$watcher = [System.IO.FileSystemWatcher]($path)
$watcher.IncludeSubdirectories = $True
#enable the watcher
$watcher.EnableRaisingEvents = $True

Be sure to set IncludeSubdirectories so that the watcher also monitors subfolders. I missed that the first time and couldn't figure out why not all my changes were being detected.

You can see that by default this will watch for all files (*.*) which is fine for my purposes. Eventually you'll see how I perform additional filtering. Next I create the event subscriber using Register-ObjectEvent.
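If you did want the watcher itself to be more selective, the `Filter` and `NotifyFilter` properties are the place to do it. This is only a sketch and not part of my setup: the scratch folder under the temp directory and the `*.ps1` filter are assumptions, chosen so the snippet runs without touching C:\work.

```powershell
# Assumption: a scratch folder stands in for C:\work so the snippet runs anywhere
$scratch = Join-Path ([System.IO.Path]::GetTempPath()) 'watcher-filter-demo'
$null = New-Item -Path $scratch -ItemType Directory -Force

$psWatcher = [System.IO.FileSystemWatcher]::new($scratch)
$psWatcher.IncludeSubdirectories = $true

# Only raise events for PowerShell script files (illustrative choice)
$psWatcher.Filter = '*.ps1'

# Only react to name changes (create, delete, rename) and to content writes
$psWatcher.NotifyFilter = [System.IO.NotifyFilters]'FileName, LastWrite'

# Review the configuration before enabling the watcher
$psWatcher | Select-Object Path, Filter, NotifyFilter, IncludeSubdirectories
```

I leave the watcher wide open and filter later, because a narrow filter here means a missed file is never reported at all.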

$params = @{
    InputObject      = $watcher
    EventName        = "Changed"
    SourceIdentifier = "WorkChange"
    MessageData      = "A file was created or changed"
}

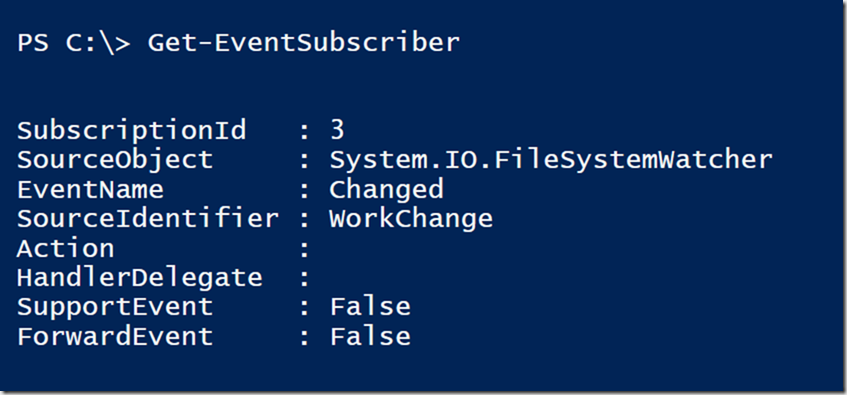

Register-ObjectEvent @params

The possible options for EventName when using the FileSystemWatcher are Changed, Created, and Deleted. Technically, there is a Renamed event, but I've never worked with that. Use the Get-EventSubscriber cmdlet to view the subscription.
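If you want to track more than one kind of event, you need one subscription per event name. The following is a rough sketch rather than what I run: the temp folder and the `Demo` prefix on the source identifiers are hypothetical choices made so the example is self-contained.

```powershell
# Assumption: a scratch folder stands in for C:\work
$demoPath = Join-Path ([System.IO.Path]::GetTempPath()) 'watcher-events-demo'
$null = New-Item -Path $demoPath -ItemType Directory -Force

$demoWatcher = [System.IO.FileSystemWatcher]::new($demoPath)
$demoWatcher.IncludeSubdirectories = $true
$demoWatcher.EnableRaisingEvents = $true

# Register one subscription per FileSystemWatcher event
foreach ($name in 'Changed', 'Created', 'Deleted', 'Renamed') {
    $params = @{
        InputObject      = $demoWatcher
        EventName        = $name
        SourceIdentifier = "Demo$name"   # hypothetical naming scheme
        MessageData      = "A file was $($name.ToLower())"
    }
    Register-ObjectEvent @params
}

# List the subscriptions that were just created
Get-EventSubscriber |
    Where-Object SourceIdentifier -like 'Demo*' |
    Select-Object SourceIdentifier, EventName
```

Each source identifier must be unique in the session, so running this twice without unregistering first will produce errors.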

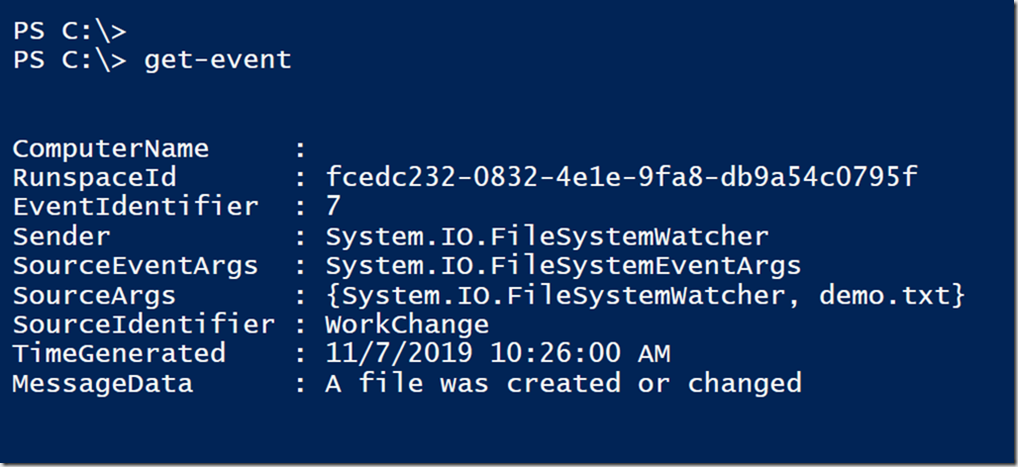

Now that I've created this subscription, every time a file is changed (or created) in C:\Work, I'll get an event. You can see these events with Get-Event.

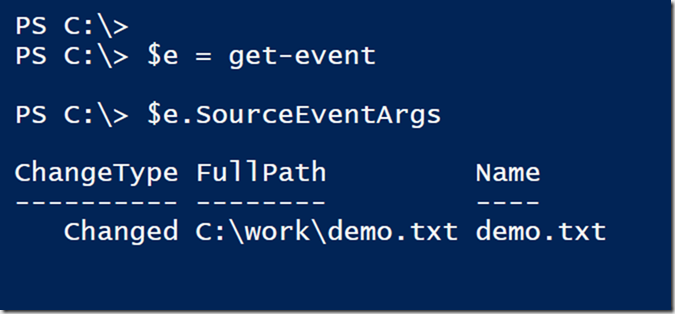

Information about the file is stored in the SourceEventArgs property.

If I needed to watch C:\Work for backup, I would know to include this file.
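To make that concrete, here is a small sketch that triggers a change and then pulls the interesting properties out of SourceEventArgs. The temp folder, the file name sample.txt, and the `ArgsDemo` identifier are all assumptions for the sake of a runnable example.

```powershell
# Assumption: a scratch folder and file stand in for real work files
$demoPath = Join-Path ([System.IO.Path]::GetTempPath()) 'watcher-args-demo'
$null = New-Item -Path $demoPath -ItemType Directory -Force
$sampleFile = Join-Path $demoPath 'sample.txt'
Set-Content -Path $sampleFile -Value 'first line'

$argsWatcher = [System.IO.FileSystemWatcher]::new($demoPath)
$argsWatcher.EnableRaisingEvents = $true
Register-ObjectEvent -InputObject $argsWatcher -EventName Changed -SourceIdentifier ArgsDemo

# Modify the file, then wait up to 5 seconds for the event to reach the queue
Add-Content -Path $sampleFile -Value 'second line'
$null = Wait-Event -SourceIdentifier ArgsDemo -Timeout 5

# SourceEventArgs is a FileSystemEventArgs object with ChangeType, Name and FullPath
Get-Event -SourceIdentifier ArgsDemo |
    Select-Object TimeGenerated,
        @{Name = 'ChangeType'; Expression = { $_.SourceEventArgs.ChangeType } },
        @{Name = 'Name'; Expression = { $_.SourceEventArgs.Name } },
        @{Name = 'FullPath'; Expression = { $_.SourceEventArgs.FullPath } }
```

Wait-Event leaves the event in the queue, so Get-Event can still read it afterwards.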

Getting Results

As long as this PowerShell session is running the event subscriber will capture changes. Later in the day, I can get a list of all files ready to be backed up.

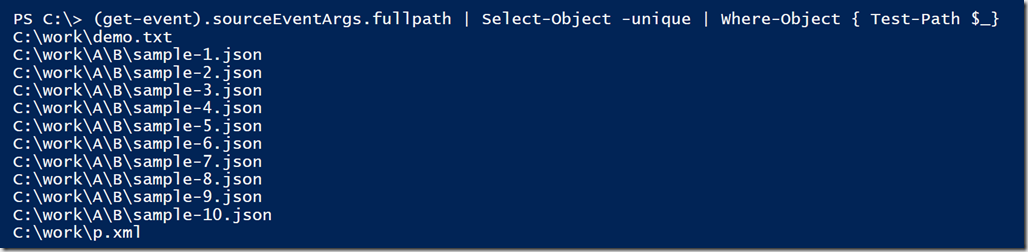

(Get-Event).SourceEventArgs.FullPath | Select-Object -Unique | Where-Object { Test-Path $_ }

I'm filtering for unique paths because sometimes a file change might fire multiple events for the same file. Or I might change a file several times throughout the day. I might also delete a file which is why I'm testing the path.

I can funnel these files to my backup routine to create an incremental archive.
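The de-duplicating and path testing can be wrapped in a small function that sits between the event queue and the backup routine. This is a sketch under assumptions, not my finished code: the name `Get-BackupCandidate` is hypothetical, it skips folders on the guess that only files belong in the archive, and it compares paths case-insensitively as Windows does.

```powershell
# Hypothetical helper: reduce a list of changed paths to unique files that still exist
function Get-BackupCandidate {
    [CmdletBinding()]
    param(
        [Parameter(ValueFromPipeline)]
        [string[]]$Path
    )

    begin {
        # Assumption: treat paths as case-insensitive, as on Windows
        $seen = [System.Collections.Generic.HashSet[string]]::new(
            [System.StringComparer]::OrdinalIgnoreCase)
    }
    process {
        foreach ($item in $Path) {
            # Add() returns $false for a path that was already seen
            if ($seen.Add($item) -and (Test-Path -LiteralPath $item -PathType Leaf)) {
                # -Force so that hidden files are returned as well
                Get-Item -LiteralPath $item -Force
            }
        }
    }
}

# Demonstration with one real file (listed twice) and one path that does not exist
$tempRoot = [System.IO.Path]::GetTempPath()
$sample = Join-Path $tempRoot 'candidate-demo.txt'
Set-Content -Path $sample -Value 'demo'
$missing = Join-Path $tempRoot ([guid]::NewGuid().ToString() + '.txt')

$result = $sample, $sample, $missing | Get-BackupCandidate
$result.Name
```

With a live subscription, the input would be `(Get-Event).SourceEventArgs.FullPath` instead of the sample paths.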

Next Steps

But there are limitations with what I've demonstrated thus far. If I close PowerShell, I lose the event subscriber. By the way, you can use Unregister-Event to manually remove the event subscriber. I also have multiple folders I want to monitor. Next time, I'll show you how I set up a PowerShell scheduled job to handle my daily monitoring needs.
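For reference, a manual cleanup might look something like the following sketch. The `CleanupDemo` identifier and the use of the temp folder are assumptions; in the session above the identifier would be WorkChange.

```powershell
# Assumption: a throwaway watcher and subscription, created only to be cleaned up
$cleanupWatcher = [System.IO.FileSystemWatcher]::new([System.IO.Path]::GetTempPath())
Register-ObjectEvent -InputObject $cleanupWatcher -EventName Changed -SourceIdentifier CleanupDemo

# Stop receiving events for this subscription
Unregister-Event -SourceIdentifier CleanupDemo

# Discard anything from that subscription still sitting in the event queue
Get-Event | Where-Object SourceIdentifier -eq 'CleanupDemo' | Remove-Event

# Release the watcher's handle on the folder
$cleanupWatcher.Dispose()
```

Unregistering does not clear events that were already queued, which is why the queue is emptied as a separate step.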
