When it comes to PowerShell scripting, we tend to focus a lot on functions and modules, with an emphasis on building reusable tools. The idea is that we can then use these tools at a PowerShell prompt to achieve a given task. More than likely, these tasks are repetitive. In these situations, it makes sense to take the commands that you would normally type interactively at a PowerShell prompt and put them into a PowerShell controller script. This script in turn uses the functions and modules that you've built. I've been building a set of PowerShell tools for backing up important folders, and I've created a few management controller scripts that I want to share with you.


Designing PowerShell Scripts

The basic idea with a PowerShell script is that it is a "canned" PowerShell session. You should be able to take the contents of a ps1 file, paste it into a PowerShell session, and it should run. Instead of typing the commands interactively, the script does it for you. However, PowerShell scripts can also take parameters, use cmdlet binding, and take advantage of scripting constructs like If/Else statements. PowerShell scripts don't have to follow the same rules we stress when building functions. The script makes it easy to run a series of commands that controls the functions, or cmdlets. Here's an example.

    #requires -version 5.1

    [cmdletbinding()]
    Param(
        [parameter(position = 0)]
        [ValidateNotNullOrEmpty()]
        [string]$Backup = "*",

        [Parameter(HelpMessage = "Show raw objects, not a formatted report.")]
        [switch]$Raw
    )

    #import each backup log and tag every entry with the log file it came from
    $f = Get-ChildItem -path "D:\backup\$backup-log.csv" | ForEach-Object {
        $p = $_.fullname
        Import-Csv $_.fullname | Add-Member -MemberType NoteProperty -Name Log -value $p -PassThru
    } | Where-Object { ([int32]$_.Size -gt 0) -AND ($_.IsFolder -eq 'False') }

    #keep only the most recent entry for each file name
    $files = ($f.name | Select-Object -Unique).Foreach({ $n = $_; $f.where({ $_.name -eq $n }) |
        Sort-Object -Property { $_.date -as [datetime] } | Select-Object -last 1 })

    if ($raw) {
        $files
    }
    else {
        $grouped = $files | Group-Object Log

        #display formatted results
        $files | Sort-Object -Property Log, Directory, Name | Format-Table -GroupBy log -Property Date, Name, Size, Directory

        $summary = foreach ($item in $grouped) {
            [PSCustomObject]@{
                Backup = (Get-Item $item.Name).basename.split("-")[0]
                Files  = $item.Count
                Size   = ($item.group | Measure-Object -Property size -sum).sum
            }
        } #foreach item

        $total = [PSCustomObject]@{
            TotalFiles  = ($grouped | Measure-Object -property count -sum).sum
            TotalSizeMB = [math]::round(($summary.size | Measure-Object -sum).sum/1MB,4)
        }

        Write-Host "Incremental Pending Backup Summary" -ForegroundColor cyan
        ($summary | Format-Table | Out-String).TrimEnd() | Write-Host -ForegroundColor cyan
        $total | Format-Table | Out-String | Write-Host -ForegroundColor cyan
    }

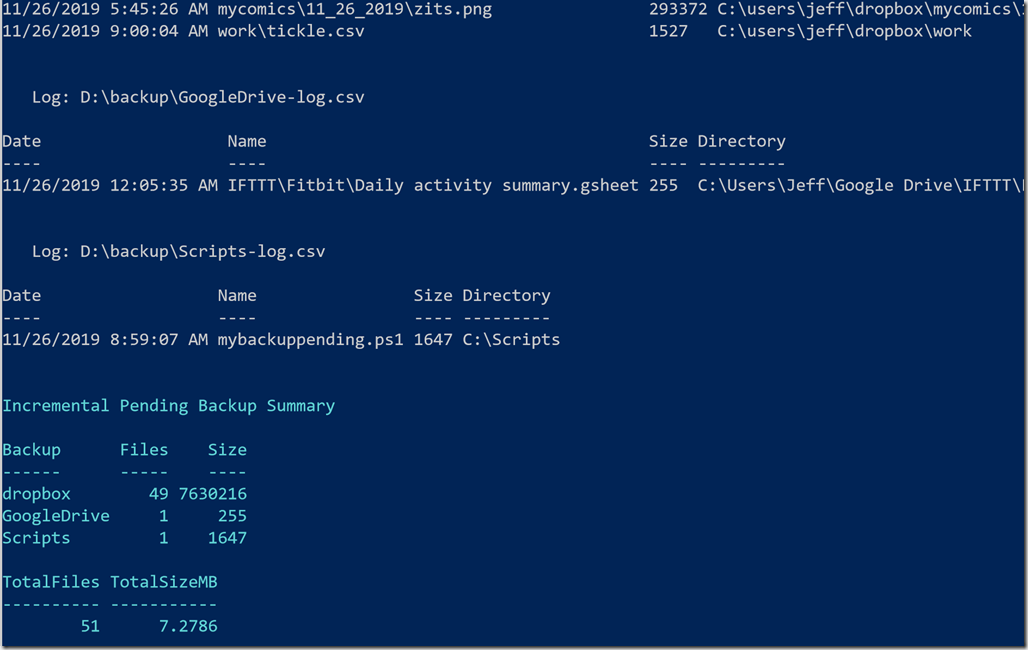

This script, MyBackupPending.ps1, is designed to show me what files will be backed up as part of my daily incremental backup job. The script goes through my list of backup CSV log files and, by default, displays a formatted report.

Normally, you wouldn't use Format-Table or Write-Host in a PowerShell function. But this control script isn't designed to write objects to the pipeline. It is designed to give me a formatted report. However, that doesn't mean I can't have things both ways.

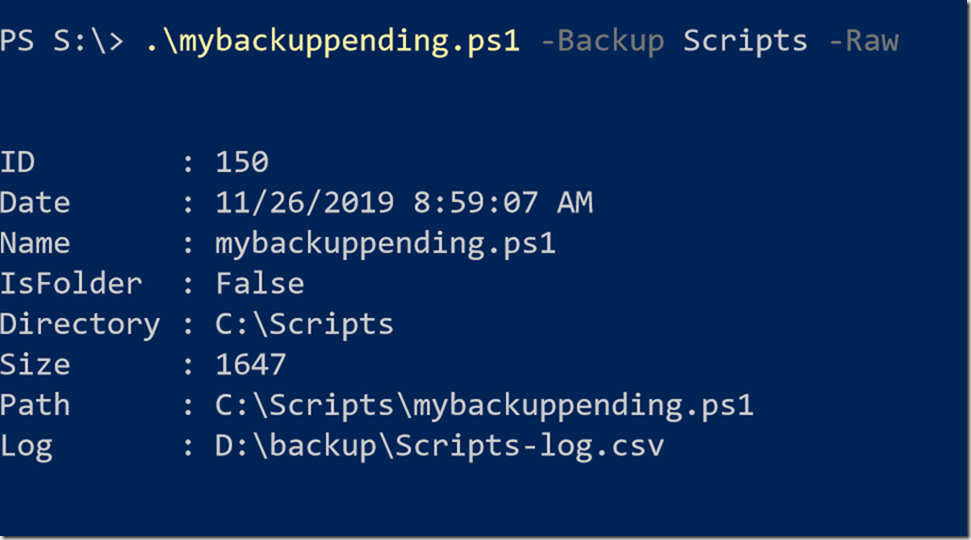

Scripts can have parameters. Mine includes parameters to indicate the backup set or an option to display raw, unformatted data.

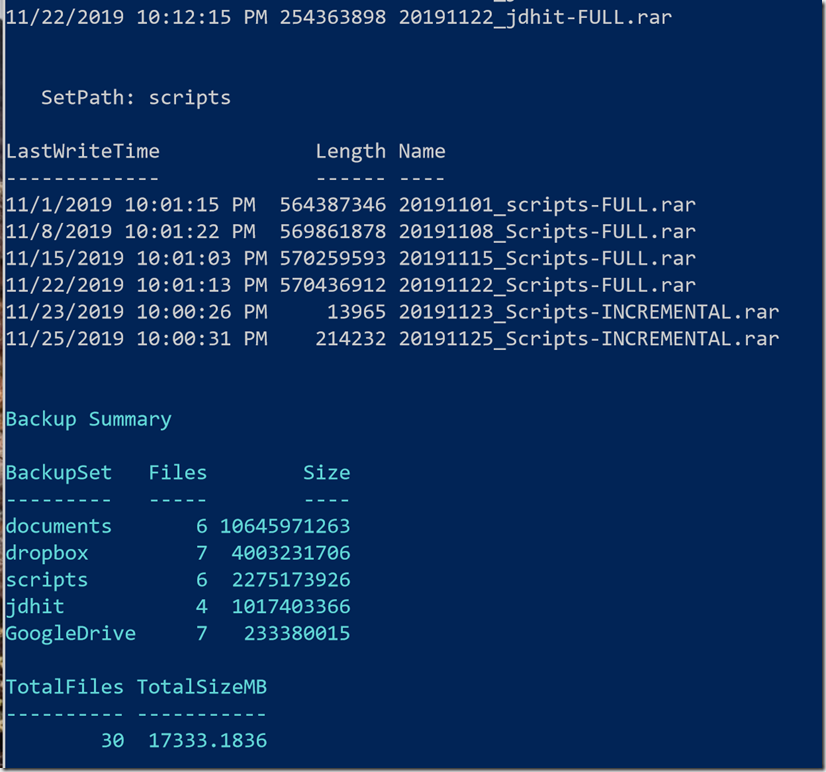
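The "both ways" pattern can be reduced to a few lines. What follows is only a minimal sketch, not the backup script itself: the script block `$controller` stands in for a .ps1 file, and the sample data is made up for illustration.

```powershell
# A stand-in for a controller script. In practice this body would live in a .ps1 file.
# The two sample objects are invented for illustration.
$controller = {
    [cmdletbinding()]
    Param([switch]$Raw)

    $data = @(
        [PSCustomObject]@{ Name = 'a.txt'; Size = 1024 }
        [PSCustomObject]@{ Name = 'b.txt'; Size = 2048 }
    )

    if ($Raw) {
        # write plain objects to the pipeline for further processing
        $data
    }
    else {
        # formatted output is meant for reading, not for the pipeline
        $data | Format-Table | Out-String | Write-Host -ForegroundColor cyan
    }
}

# default: a formatted report
& $controller

# -Raw: objects that other commands can consume
$sum = (& $controller -Raw | Measure-Object -Property Size -Sum).Sum
```

With `-Raw`, the output behaves like that of any other command, so it can be measured, filtered, or exported.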

You can use the same scripting techniques in your scripts that you do in your functions. Here is my control script that gives me a report on the backup archives.

    #requires -version 5.1

    [cmdletbinding()]
    Param(
        [Parameter(Position = 0, HelpMessage = "Enter the path with extension of the backup files.")]
        [ValidateNotNullOrEmpty()]
        [ValidateScript( { Test-Path $_ })]
        [string]$Path = "\\ds416\backup",

        [Parameter(HelpMessage = "Get backup files only with no formatted output.")]
        [Switch]$Raw
    )

    <#
     A regular expression pattern to match on backup file name with named captures
     to be used in adding some custom properties. My backup names are like:
      20191101_Scripts-FULL.rar
      20191107_Scripts-INCREMENTAL.rar
    #>
    [regex]$rx = "^20\d{6}_(?<set>\w+)-(?<type>\w+)\.rar$"

    <#
     I am doing some 'pre-filtering' on the file extension and then using the regular
     expression filter to fine tune the results
    #>
    $files = Get-ChildItem -path $Path -filter *.rar | Where-Object { $rx.IsMatch($_.name) }

    #add some custom properties to be used with formatted results based on named captures
    foreach ($item in $files) {
        $setpath = $rx.matches($item.name).groups[1].value
        $settype = $rx.matches($item.name).groups[2].value

        $item | Add-Member -MemberType NoteProperty -Name SetPath -Value $setpath
        $item | Add-Member -MemberType NoteProperty -Name SetType -Value $setType
    }

    if ($raw) {
        $Files
    }
    else {
        $files | Sort-Object SetPath, SetType, LastWriteTime | Format-Table -GroupBy SetPath -Property LastWriteTime, Length, Name

        $grouped = $files | Group-Object SetPath

        $summary = foreach ($item in $grouped) {
            [pscustomobject]@{
                BackupSet = $item.name
                Files     = $item.Count
                #file objects expose Length, not Size
                Size      = ($item.group | Measure-Object -Property Length -sum).sum
            }
        }

        $total = [PSCustomObject]@{
            TotalFiles  = ($grouped | Measure-Object -property count -sum).sum
            TotalSizeMB = [math]::round(($summary.size | Measure-Object -sum).sum/1MB,4)
        }

        Write-Host "Backup Summary" -ForegroundColor cyan
        ($summary | Sort-Object Size -Descending | Format-Table | Out-String).TrimEnd() | Write-Host -ForegroundColor cyan
        $total | Format-Table | Out-String | Write-Host -ForegroundColor cyan
    }

You'll notice that the parameters have attributes and validation, just as they would in a function. The result is a very easy command to run that displays my backup files formatted and ordered, including a summary.
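To see what the named captures in the pattern return, here is a small sketch that runs the same regular expression against the two sample file names from the script comments, plus one made-up name (`notes.txt`) that should not match. It reads the captures by name rather than by index, which is an alternative to what the script does, and it uses a single-quoted string so nothing in the pattern is expanded.

```powershell
# Same pattern as the report script, written as a single-quoted string
[regex]$rx = '^20\d{6}_(?<set>\w+)-(?<type>\w+)\.rar$'

# two sample names from the script comments and one made-up non-matching name
$names = '20191101_Scripts-FULL.rar', '20191107_Scripts-INCREMENTAL.rar', 'notes.txt'

$parsed = foreach ($name in $names) {
    $m = $rx.Match($name)
    if ($m.Success) {
        [PSCustomObject]@{
            Name    = $name
            # read the captures by name instead of by position
            SetPath = $m.Groups['set'].Value
            SetType = $m.Groups['type'].Value
        }
    }
}

$parsed
```

Reading captures by name keeps the code working if another group is later added to the pattern.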

Run PowerShell Scripts from Anywhere

One drawback to PowerShell control scripts is that you have to specify the full path to the ps1 file. If your scripts are in a simple path like C:\Scripts, perhaps that isn't that much more to type. But I'm always looking for ways to be "creatively lazy". Here's one approach you might like.

PowerShellGet, which makes it easy to find and install modules from the PowerShell Gallery, also has a set of related commands to find and install PowerShell scripts. You can install a PowerShell script file from the PowerShell Gallery and run the .ps1 file from anywhere. You don't need to specify the path. I want that!

I'm going to take the easy route and use the location that PowerShell already knows about: $env:ProgramFiles\WindowsPowerShell\Scripts. If you copy a ps1 file to this folder, you should be able to run it by simply typing the script name. This is perfect. Almost.
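Running a script by name alone relies on its folder being listed in the PATH environment variable. If typing the script name doesn't work on your system, a quick check like the one sketched here may help. `Test-PathEntry` is a hypothetical helper name, not a built-in command, and the comparison is a simple string match that ignores trailing slashes.

```powershell
# Hypothetical helper: returns $true if a folder is listed in a PATH-style string.
function Test-PathEntry {
    Param(
        [Parameter(Mandatory)]
        [string]$Folder,
        # defaults to the current session's PATH
        [string]$PathVariable = $env:PATH
    )

    # ';' on Windows, ':' on other platforms
    $sep = [string][System.IO.Path]::PathSeparator
    $trim = [char[]]'\/'

    $entries = $PathVariable.Split($sep) |
        Where-Object { $_ } |
        ForEach-Object { $_.TrimEnd($trim) }

    # -contains is case-insensitive
    $entries -contains $Folder.TrimEnd($trim)
}

# check the Installed Scripts location used in this article
Test-PathEntry -Folder "$env:ProgramFiles\WindowsPowerShell\Scripts"
```

If this returns False, the folder would need to be added to PATH before scripts in it can be run by name.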

I like to keep all my scripting work in C:\Scripts, and I knew I'd be tweaking my backup control scripts for a while. I didn't want to have to deal with editing the file and keeping the version in Installed Scripts in sync. So I linked them. I took advantage of symbolic links to create a linked copy in Installed Scripts. Now, I can run the controller script by name alone, and all I need to do is edit the file under C:\Scripts.

To simplify the process, naturally I created a controller script.

    #requires -version 5.1

    #create linked script copies in Installed Scripts

    [cmdletbinding(SupportsShouldProcess)]
    Param(
        [Parameter(Position = 0, Mandatory, ValueFromPipeline, ValueFromPipelineByPropertyName)]
        [ValidateScript({Test-Path $_})]
        [String[]]$Path
    )

    Begin {
        $installPath = "$env:ProgramFiles\WindowsPowerShell\Scripts"
        Write-Verbose "Creating links to $installpath"
    }
    Process {
        if (Test-Path -Path $installPath) {
            foreach ($file in $Path) {
                Try {
                    $cfile = Convert-Path $File -ErrorAction Stop
                }
                Catch {
                    Throw "Failed to find or convert $file"
                }
                if ($cfile) {
                    $name = Split-Path -Path $cfile -Leaf
                    $target = Join-Path -path $installPath -ChildPath $name
                    Write-Verbose "Creating a link from $cfile to $target. A 0 byte file is normal."
                    #overwrite the target if it already exists
                    New-Item -Path $target -value $cfile -ItemType SymbolicLink -Force
                }
            } #foreach file
        }
        else {
            Write-Warning "Can't find $installPath. This script might require a Windows platform."
        }
    }
    End {
        Write-Verbose "Finished linking script files."
    }

    # Get-command -CommandType ExternalScript

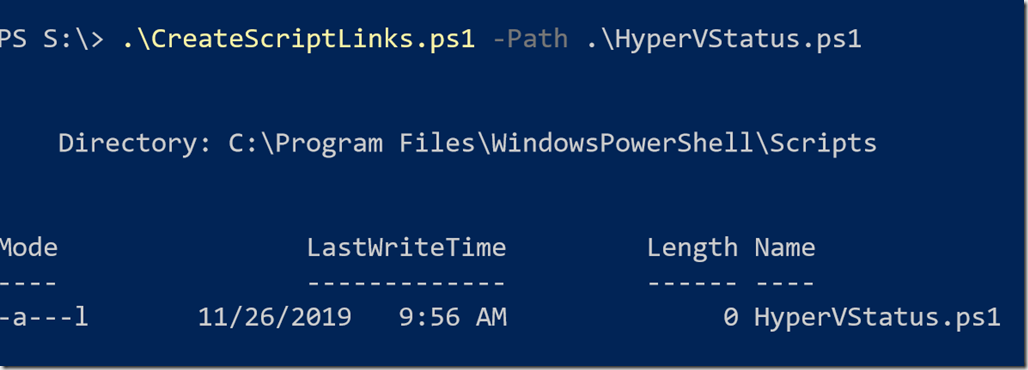

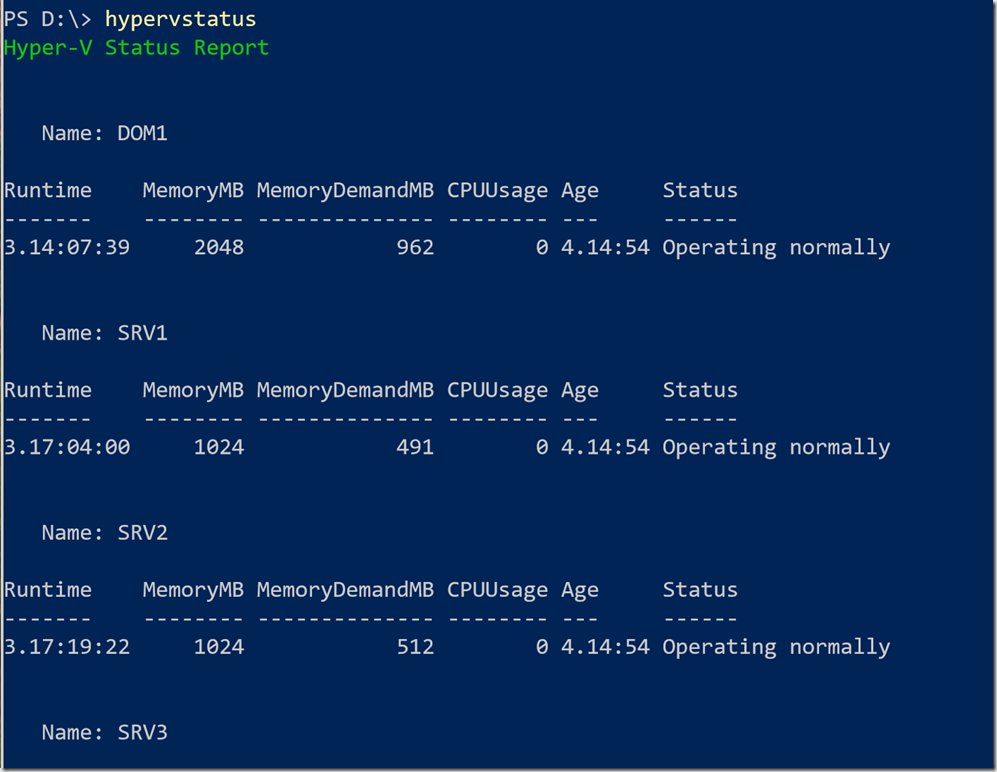

I have a script file I run to show me a custom view of running Hyper-V virtual machines, C:\Scripts\HyperVStatus.ps1. I'll create a link to Installed Scripts.

As you can see this creates a 0 byte link back to the target script. Now I can run this script file from anywhere.

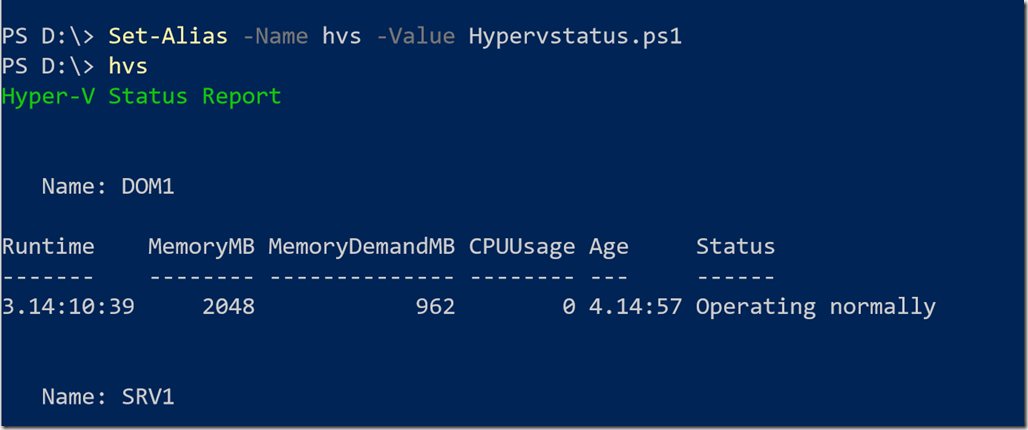

If I update the script, I don't have to do anything to the link. In fact, I can go even further and define an alias.

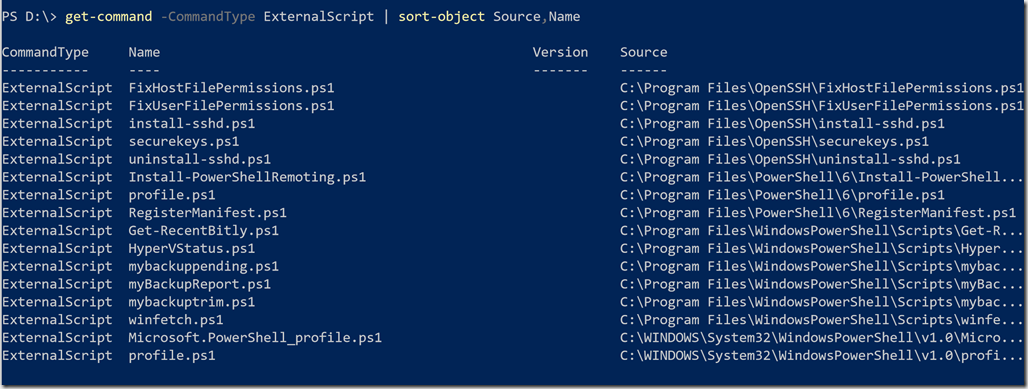
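Defining the alias takes a single command. In this sketch, `hvs` is a made-up alias name chosen for illustration. The alias points at the script by name, so it only resolves when HyperVStatus.ps1 can be found through PATH.

```powershell
# 'hvs' is a hypothetical alias name; the value is the linked script's file name
Set-Alias -Name hvs -Value HyperVStatus.ps1

# confirm what the alias points to
Get-Alias -Name hvs
```

An alias created this way lasts only for the current session, so adding the `Set-Alias` line to your PowerShell profile script makes it available every time.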

I created links to my backup control scripts. Use Get-Command to discover external scripts.

Don't be afraid to create and take advantage of PowerShell controller scripts. These are tools that make your job easier, as long as you understand their purpose.

Very nice and informative 👍

Just wondering, what is the advantage of using symbolic links and aliases over wrapping the code in functions within modules and then moving those modules to \Documents\WindowsPowerShell\Modules, where they would be auto-imported into every PowerShell session?

I think it comes down to whether you want to make available a tool or command, like Get-Unicorn, or a control script that uses the command. In my case, I have code in a script file, not a function, that I want to make available to me everywhere.