A few weeks ago, a new Iron Scripter PowerShell scripting challenge was issued. For this challenge we were asked to write some PowerShell code that we could use to inventory our PowerShell script library. Here's how I approached the problem, which by no means is the only way.

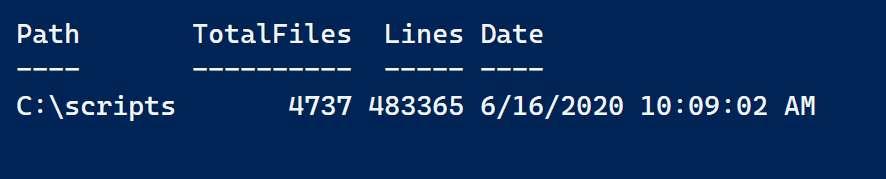

Lines of Code

The first part of the challenge was to count the number of lines of code in PowerShell script files, meaning any file with a PowerShell-related extension. This is going to require several steps.

- Get the PowerShell files

- Get the content of each file

- Count the number of lines.

Get-ChildItem is the likely command to list all the files. Get-Content is the obvious choice to read each file. For counting, Measure-Object has parameters to measure lines of text in a file. But you won't know that unless you are in the habit of reading help files. Here's a possible solution.

$Path = "C:\scripts"

Get-ChildItem -Path $Path -file -Recurse -Filter "*.ps*" |

Where-Object {$_.Extension -match "\.ps(m)?1$"} -outvariable o |

Get-Content | Measure-Object -line |

Select-Object -Property @{Name="Path";Expression={$Path}},

@{Name="TotalFiles";Expression = {$o.count}},Lines,

@{Name="Date";Expression = {Get-Date}}

This took about 35 seconds to run. I could probably improve performance by using .NET classes directly, but I wanted to stick to cmdlets where I could. By the way, you'll notice I'm essentially filtering twice. You always want to filter as early in your expression as you can. I'm using the -Filter parameter to get what should be PowerShell files. This does the bulk of the filtering. Where-Object is filtering out the odd files like foo.psx using a regular expression pattern.
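
For comparison, here is a minimal sketch of the .NET route mentioned above. Treat it as an illustration rather than a benchmarked replacement: Get-LineCount is a hypothetical helper name, and it counts every line in the file, so its total may not agree exactly with what Measure-Object -Line reports.

```powershell
# Hypothetical helper: count the lines in one file with System.IO.File.ReadLines(),
# which streams the file instead of loading all of it into memory first.
function Get-LineCount {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory, ValueFromPipelineByPropertyName)]
        [Alias('FullName')]
        [string]$Path
    )
    process {
        $count = 0
        # .NET resolves relative paths against the process working directory,
        # not the current PowerShell location, so pass a full path.
        foreach ($line in [System.IO.File]::ReadLines($Path)) { $count++ }
        $count
    }
}

# Demo on a throwaway file so the sketch is safe to run anywhere
$demo = [System.IO.Path]::GetTempFileName()
Set-Content -Path $demo -Value 'Get-Process', '', 'Get-Service'
$demoCount = Get-LineCount -Path $demo
Remove-Item -Path $demo
$demoCount
```

Because the parameter accepts FullName from the pipeline, the output of Get-ChildItem could be piped to it and then on to Measure-Object -Sum for a grand total.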

Here's a multi-step equivalent.

$Path = "C:\scripts"

$files = Get-ChildItem -Path $Path -file -Recurse -Filter "*.ps*" |

Where-Object {$_.Extension -match "\.ps(m)?1$"}

$measure = $files | Get-Content | Measure-Object -line

[pscustomobject]@{

Computername = $env:COMPUTERNAME

Date = (Get-Date)

Path = $Path

TotalFiles = $files.count

TotalLines = $measure.lines

}

Same information structured a different way.

Command Inventory

So I have a little over 4700 files. These files go back to 2006. What commands have I been using? That was the second part of the challenge.

My initial thought was to follow a process like this:

- Get a list of all possible commands

- Get all PowerShell files

- Go through the content of each file

- Match content to command names with Select-String

This brute force approach went something like this:

#brute force approach

#filter out location changing functions

$cmds = (Get-Command -CommandType Cmdlet,Function).Name.where({$_ -notmatch ":|\\|\.\."}) | Get-Unique

$cmds| foreach-object -Begin {$cmdHash = @{}} -process {

if (-Not $cmdhash.ContainsKey($_)) {$cmdHash.Add($_,0) }

}

$Path = "C:\scripts"

$files = (Get-ChildItem -Path $Path -file -Recurse -Filter "*.ps*").Where({$_.Extension -match "\.ps(m)?1$"})

[string[]]$keys = $cmdHash.Keys

foreach ($file in $files) {

foreach ($key in $keys) {

if (Select-String -path $file.fullname -pattern $key -Quiet) {

$cmdhash.item($key)++

}

} #foreach key

} #foreach file

($cmdhash.GetEnumerator()).WHERE({$_.value -gt 0}) | sort-object -Property Value -Descending | select-object -first 100

But this didn't scale at all and took way too long to run to be practical. Then I thought, why not use one gigantic regex pattern?

$r = @{}

$pester = (get-command -module pester).name

$cmds2 = $cmds.where({$pester -notcontains $_})

$pattern = $cmds2 -join "|"

[System.Text.RegularExpressions.Regex]::Matches((Get-Content $files[1].FullName),"$pattern","IgnoreCase") |

Foreach-object {

if ($r.ContainsKey($_.value)) {

$r.item($($_.value))++

}

else {

$r.add($($_.value),1)

}

}

$r.GetEnumerator() | Sort-Object Value -descending | Select-Object -first 25

This is using $cmds from the previous example. I also realized I needed to filter out the Pester module's commands because short names like It and Should were being falsely detected. This approach was fine for 10 files, finishing in about 9 seconds. It took about 2 minutes to do 1000 files. But all 4800 files took over 2 hours. Sure, I could run it as a job and get the results later, but I wanted better.
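
Part of the false-positive problem is that a plain joined pattern matches anywhere, including inside longer words. Here is a small sketch of one way to tighten it, using invented sample names and text: escape each name and reject matches that touch a word character or hyphen on either side. It is not what I ended up using, just an illustration of the idea.

```powershell
# Sample data; the names and text are illustrative only
$names = 'Get-Item', 'It', 'Should'
$text  = 'Get-Item C:\temp | Write-Output'

# Naive pattern: a plain join of the command names
$naive = [regex]::new(($names -join '|'), 'IgnoreCase')

# Bounded pattern: escape each name, then use lookarounds so a match
# cannot start or end in the middle of a longer word or Verb-Noun name
$escaped = $names | ForEach-Object { [regex]::Escape($_) }
$bounded = [regex]::new('(?<![\w-])(?:' + ($escaped -join '|') + ')(?![\w-])', 'IgnoreCase')

$naiveHits   = @($naive.Matches($text)   | ForEach-Object Value)
$boundedHits = @($bounded.Matches($text) | ForEach-Object Value)

"Naive:   $($naiveHits -join ', ')"
"Bounded: $($boundedHits -join ', ')"
```

The naive pattern also picks up the "it" inside Write-Output, while the bounded one only reports Get-Item. Even so, a bounded pattern will still match command names that appear in comments or strings, so it reduces the noise without removing it.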

Using the AST

So I turned to the AST, the abstract syntax tree. This is how PowerShell processes code under the hood. I started out with a proof-of-concept using a single file.

New-Variable astTokens -force

New-Variable astErr -force

$path = 'C:\scripts\JDH-Functions.ps1'

$AST = [System.Management.Automation.Language.Parser]::ParseFile($Path,[ref]$astTokens,[ref]$astErr)

$found = $AST.FindAll(

{$args[0] -is [System.Management.Automation.Language.CommandAst]},

$true

)

The $asttokens variable contains an object for each command and language element detected. All I needed to do was a little filtering, grouping and sorting.
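
If you want to see these tokens without pointing at a real file, a sketch like this parses an inline sample instead. The sample code and variable names are made up for the demo, and I am using ParseInput() and HasFlag() here as substitutes for ParseFile() and a straight -eq comparison.

```powershell
# Made-up sample script text to parse
$code = @'
Get-ChildItem -Path C:\scripts | Where-Object { $_.Length -gt 1kb }
dir | ? Name -like '*.ps1'
'@

$tokens = $null
$parseErrors = $null
# ParseInput() takes a string; ParseFile() does the same job for a path
[void][System.Management.Automation.Language.Parser]::ParseInput($code, [ref]$tokens, [ref]$parseErrors)

# Keep only the tokens the parser flagged as command names
$commandFlag = [System.Management.Automation.Language.TokenFlags]::CommandName
$commandTokens = $tokens | Where-Object { $_.TokenFlags.HasFlag($commandFlag) }

$commandTokens | Select-Object -Property Text, Kind, TokenFlags
```

Pay attention to the Kind column when you run it. Bare words such as dir and hyphenated names such as Get-ChildItem should show up as different kinds, which matters when deciding which tokens might be aliases.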

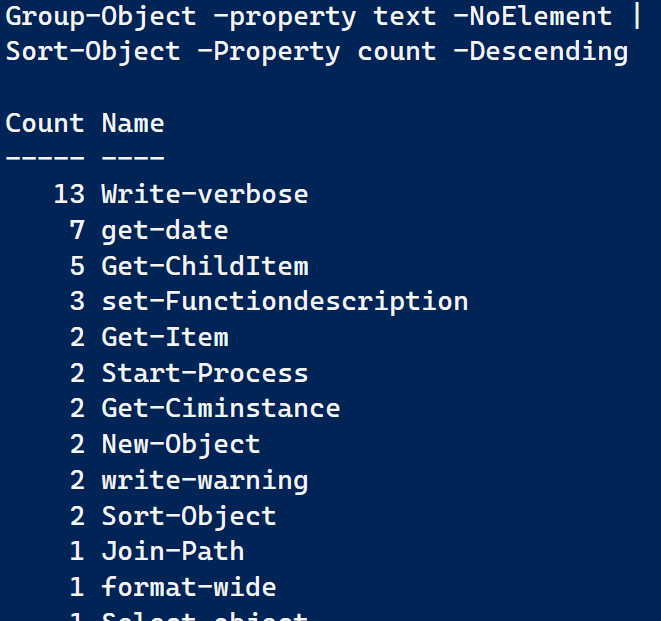

($asttokens).where({$_.tokenFlags -eq "commandname" -AND (-Not $_.nestedtokens)}) |

Select-Object -property text |

Group-Object -property text -NoElement |

Sort-Object -Property count -Descending

These are results for a single file. With a little tweaking, I came up with this function.

Function Measure-ScriptFile {

[cmdletbinding()]

[alias("msf")]

Param(

[Parameter(Mandatory, ValueFromPipeline, ValueFromPipelineByPropertyName)]

[alias("fullname")]

[string]$Path

)

Begin {

Write-Verbose "[BEGIN ] Starting: $($MyInvocation.Mycommand)"

$countHash = @{}

#get all commands

#Write-Verbose "[BEGIN ] Building command inventory"

#$cmds = (Get-Command -commandtype Filter, Function, Cmdlet).Name

#Write-Verbose "[BEGIN ] Using $($cmds.count) command names"

New-Variable astTokens -force

New-Variable astErr -force

} #begin

Process {

$AST = [System.Management.Automation.Language.Parser]::ParseFile($Path, [ref]$astTokens, [ref]$astErr)

[void]$AST.FindAll({$args[0] -is [System.Management.Automation.Language.CommandAst]}, $true)

#filter for commands but not file system commands like notepad.exe

($asttokens).where( {$_.tokenFlags -eq "commandname" -AND (-Not $_.nestedtokens) -AND ($_.text -notmatch "\.")}) |

ForEach-Object {

#resolve alias

$token = $_

#reset so a value left over from the previous token is never counted twice

$value = $null

if ($_.kind -eq 'identifier') {

Try {

$value = (Get-Command -Name $_.text -ErrorAction stop).ResolvedCommandName

}

Catch {

#ignore the error

$msg = "Unresolved: text:{0} in {1}" -f $token.text, $Path

Write-Verbose $msg

$value = $null

}

} #if identifier

elseif ($token.text -eq '?') {

$Value = 'Where-Object'

}

elseif ($token.text -eq '%') {

$value = 'ForEach-Object'

}

#test if the text looks like a command name

elseif ($token.text -match "\w+-\w+") {

$value = $token.text

}

<#

Use if testing for actual commands

elseif ($cmds -contains $token.text) {

$value = $token.text

}

#>

#add the value to the counting hashtable

if ($value) {

if ($countHash.ContainsKey($value)) {

$countHash.item($value)++

}

else {

$countHash.add($value, 1)

}

} #if $value

} #foreach

} #process

End {

$countHash

Write-Verbose "[END ] Ending: $($MyInvocation.Mycommand)"

} #end

}

The function resolves aliases and builds a command table if the text looks like a command, that is, if it follows the Verb-Noun naming convention. I have code that tests each possible command against the list of command names built from Get-Command, but this adds 30 seconds or more to the processing time. I apparently have over 16K commands, and that takes time to check.
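
As a point of comparison, the CommandAst objects that FindAll() returns can also be counted directly instead of going through the tokens. This is only a sketch on made-up sample code, not the function above: it does not resolve aliases, and it simply skips anything with a period in the name.

```powershell
# Made-up sample script text to parse
$code = @'
Get-ChildItem C:\scripts | Sort-Object Length
Get-ChildItem C:\temp
notepad.exe C:\temp\notes.txt
'@

$tokens = $null
$parseErrors = $null
$ast = [System.Management.Automation.Language.Parser]::ParseInput($code, [ref]$tokens, [ref]$parseErrors)

# Find every CommandAst node, searching nested script blocks as well
$commands = $ast.FindAll({ $args[0] -is [System.Management.Automation.Language.CommandAst] }, $true)

$counts = @{}
foreach ($command in $commands) {
    # GetCommandName() returns $null when the name is not a plain string,
    # for example when a variable or script block is invoked
    $name = $command.GetCommandName()
    if ($name -and $name -notmatch '\.') {
        # a missing key returns $null, and 1 + $null is 1
        $counts[$name] = 1 + $counts[$name]
    }
}

$counts
```

A hashtable created with @{} is case-insensitive, so get-childitem and Get-ChildItem end up under the same key.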

I can run it like this:

$Path = "C:\scripts"

(Get-ChildItem -Path $Path -file -Recurse -Filter "*.ps*").Where({$_.Extension -match "\.ps(m)?1$"}) | Measure-ScriptFile

This still takes time. Over 45 minutes but definitely faster. That averages out to just under 2 seconds per file which isn't too bad.

Parallel

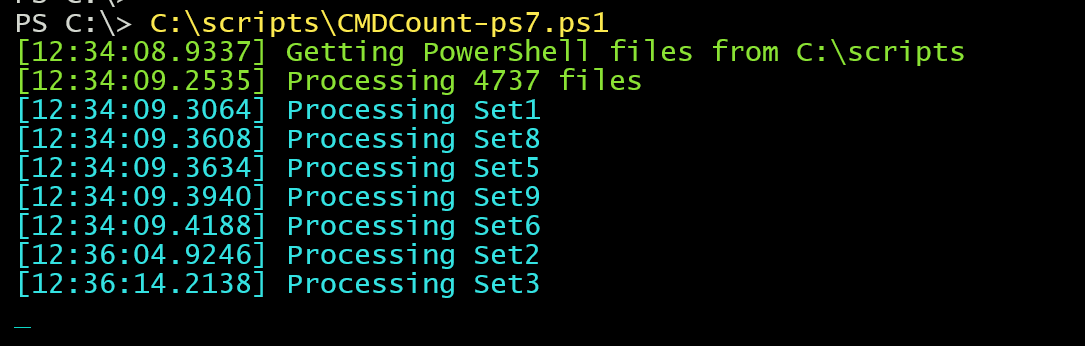

Now the big question becomes, is this a case where the new -Parallel feature in PowerShell 7 can come to the party? The answer is a cautious "maybe". Remember that there is overhead in setting up and tearing down the parallel processing. You need to make sure that your parallel operation is "cost-effective".

It would make no sense to process individual files in parallel. The overhead alone would take at least a second or two, which is as long as it takes my function to process an average file. My solution was to divide my files into sets of 500 files and then process each set in parallel.

#requires -version 7.0

[cmdletbinding()]

Param([string]$Path = "C:\scripts",[int]$ThrottleLimit = 5)

Write-Host "Getting PowerShell files from $Path" -ForegroundColor Green

$files = (Get-ChildItem -Path $Path -file -Recurse -Filter "*.ps*").Where({$_.Extension -match "\.ps(m)?1$"})

Write-Host "Processing $($files.count) files" -ForegroundColor Green

#divide the files into sets

$sets = @{}

$c=0

for ($i = 0 ; $i -lt $files.count; $i+=500) {

$c++

$start = $i

$end = $i+499

$sets.Add("Set$C",@($files[$start..$end]))

}

#process the file sets in parallel

$results = $sets.GetEnumerator() |

ForEach-Object -throttlelimit $ThrottleLimit -parallel {

. C:\scripts\Measure-ScriptFile.ps1

Write-Host "Processing $($_.name)" -fore cyan

$_.value | Measure-ScriptFile

}

#assemble the results into a single data set.

$data = @{}

foreach ($result in $results) {

$result.getenumerator().foreach({

if ($data.containskey($_.name)) {

$data.item($_.name)+=$_.value

}

else {

$data.add($_.name,$_.value)

}

})

}

#the unified results

$data

I added the Write-Host lines so I could see the parallel processing in action.

My script includes the ThrottleLimit parameter, which gets passed to ForEach-Object. I tried my script with different throttle values and it didn't really make much of a difference for this task. But it was significantly faster! Processing time for almost 5000 files was about 10 minutes!

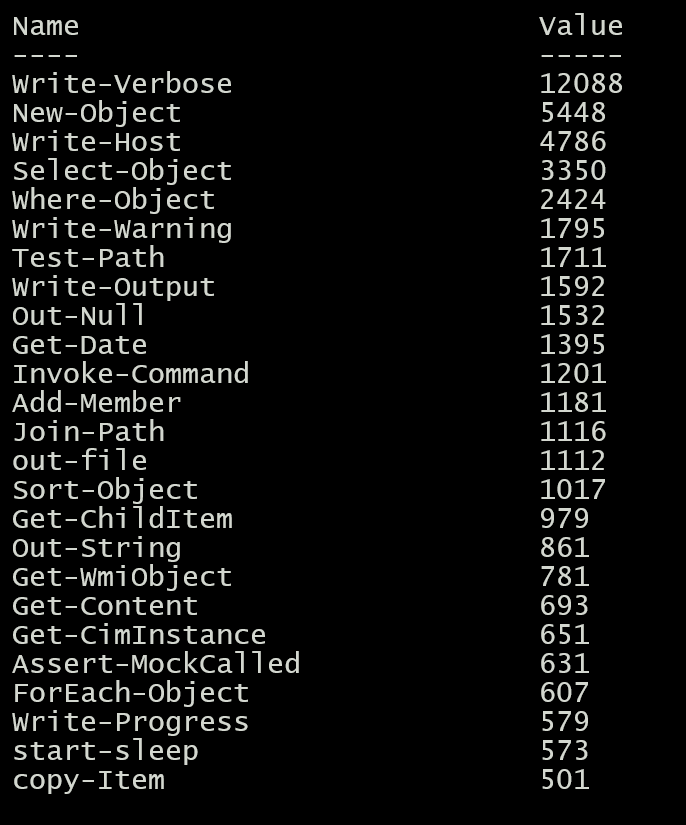

And this is apparently what I use the most in my PowerShell work. Here are the top 25 commands.

All from an overall result of 2422 commands.
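
The script hands back a hashtable, so getting a top-N list takes one more step. Here is a minimal sketch using the same sort-and-select pattern from the regex example. The $sample hashtable and its counts are invented stand-ins for the merged results.

```powershell
# Stand-in for the merged results; these counts are invented for illustration
$sample = @{
    'Write-Verbose' = 40
    'Get-Date'      = 12
    'Where-Object'  = 25
    'Select-Object' = 31
}

# Sort the key/value pairs by count and keep the first few as simple objects
$top = $sample.GetEnumerator() |
    Sort-Object -Property Value -Descending |
    Select-Object -First 3 -Property @{Name = 'Command'; Expression = { $_.Key }},
        @{Name = 'Count'; Expression = { $_.Value }}

$top
```

Swap in the real hashtable and change -First to 25 to get a list like mine.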

This is not an absolute list of course. It doesn't take into account commands from modules that no longer exist on my computer or from non-standard command names I might have used, such as for private functions. But it should be close enough.

I don't think I solved everything in the challenge, but this is a good place to start. I'll let you play with the code and see what kind of results you get.

Comments and questions are always welcome.

Update

Check out the expanded version of this code, now packaged as a PowerShell module at https://jdhitsolutions.com/blog/powershell/7559/an-expanded-powershell-scripting-inventory-tool/
