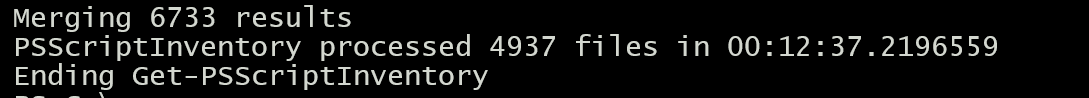

The other day I shared the code I worked up to solve an Iron Scripter PowerShell challenge. One shortcoming was that I didn't address the challenge's request to include a property indicating which files use a given command. I also felt I could do better with performance in Windows PowerShell on large file sets; I'm trying to process almost 5000 PowerShell files.


A Module is Born

The first thing I did was re-organize my code into a PowerShell module. This allowed me to break out some code into separate functions like this.

```powershell
Function Get-ASTToken {
    [CmdletBinding()]
    Param (
        [Parameter(Mandatory, Position = 0)]
        [string]$Path
    )

    Begin {
        Write-Verbose "[BEGIN ] Starting: $($MyInvocation.Mycommand)"
        # variables that ParseFile() will populate by reference
        New-Variable astTokens -Force
        New-Variable astErr -Force
    } #begin

    Process {
        # resolve relative or PSDrive paths to a full filesystem path
        $cPath = Convert-Path $Path
        Write-Verbose "[PROCESS] Getting AST Tokens from $cPath"
        $AST = [System.Management.Automation.Language.Parser]::ParseFile($cPath, [ref]$astTokens, [ref]$astErr)
        # ParseFile() has already filled $astTokens; this FindAll() result is discarded
        [void]$AST.FindAll({$args[0] -is [System.Management.Automation.Language.CommandAst]}, $true)
        # write the token array to the pipeline
        $astTokens
    } #process

    End {
        Write-Verbose "[END ] Ending: $($MyInvocation.Mycommand)"
    } #end
}
```
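Get-ASTToken returns tokens, but the same parser also exposes the syntax tree. As a rough sketch of pulling command names out of that tree, the following uses ParseInput(), which takes a string instead of a file path, on a made-up one-line script. It's an illustration, not the module's code.

```powershell
# Hypothetical sample script text to parse
$code = 'Get-ChildItem -Path C:\Temp | select-Object Name; write-verbose "done"'

$tokens = $null
$parseErrors = $null
# ParseInput() works like ParseFile() but parses a string
$ast = [System.Management.Automation.Language.Parser]::ParseInput($code, [ref]$tokens, [ref]$parseErrors)

# find every command invocation; $true also searches nested script blocks
$commands = $ast.FindAll({ $args[0] -is [System.Management.Automation.Language.CommandAst] }, $true)

# GetCommandName() returns the name exactly as written in the source, casing included
$names = @($commands | ForEach-Object { $_.GetCommandName() })
$names
```

Notice the inconsistent casing in the output. That is the same problem the TextInfo trick later in this post deals with.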

One advantage is that I can now build Pester tests. Pester can't mock a call to a .NET method, but it can mock a PowerShell function. Were I to build tests, I could mock a result from this function.

I'm also now defining a class for my custom object.

```powershell
class PSInventory {
    [string]$Name
    [int32]$Total
    [string[]]$Files
    # hidden: left out of Get-Member (unless -Force) and tab completion, but still accessible
    hidden [datetime]$Date = (Get-Date)
    hidden [string]$Computername = [System.Environment]::MachineName

    PSInventory([string]$Name) {
        $this.Name = $Name
    }
}
```

In my code, I can create a result like this:

```powershell
$item = [PSInventory]::new($TextInfo.ToTitleCase($Value.tolower()))
```
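To see what the hidden keyword buys you, here is a small sketch that repeats the class definition so it runs on its own, creates an item with made-up values, and compares what Get-Member reports with and without -Force.

```powershell
class PSInventory {
    [string]$Name
    [int32]$Total
    [string[]]$Files
    hidden [datetime]$Date = (Get-Date)
    hidden [string]$Computername = [System.Environment]::MachineName
    PSInventory([string]$Name) { $this.Name = $Name }
}

# sample values for illustration only
$item = [PSInventory]::new('Write-Verbose')
$item.Total = 3
$item.Files = 'a.ps1', 'b.ps1'

# Get-Member omits hidden members unless you add -Force
$visible = (Get-Member -InputObject $item -MemberType Property).Name
$all = (Get-Member -InputObject $item -MemberType Property -Force).Name

# hidden properties can still be read directly
$item.Computername
```

This keeps the default output focused on Name, Total, and Files while still recording when and where the inventory was taken.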

As an aside, one thing that bugged me about my original code was that command names were displayed as they were found. I haven't been consistent over the years, so that meant seeing output like 'Write-verbose' and 'select-Object'. My solution was to use a trick my friend Gladys Kravitz taught me: a TextInfo object.

```powershell
$TextInfo = (Get-Culture).TextInfo
```

This object has a ToTitleCase() method, which I invoke. To ensure consistency, I first convert the $Value variable, which contains the derived command name, to lowercase and then convert that to title case. Nice.
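Why lowercase first? ToTitleCase() treats a word that is entirely uppercase as an acronym and leaves it alone. This short sketch, using made-up command names, shows both behaviors.

```powershell
$TextInfo = (Get-Culture).TextInfo

# command names as they might appear in older scripts (sample values)
$raw = 'write-verbose', 'select-Object', 'GET-CHILDITEM'

# lowercase first, then title-case each hyphen-separated word
$normalized = foreach ($name in $raw) { $TextInfo.ToTitleCase($name.ToLower()) }
$normalized

# without ToLower(), an all-uppercase word is treated as an acronym and left unchanged
$skipped = $TextInfo.ToTitleCase('GET-CHILDITEM')
$skipped
```

The third result shows the trade-off: internal capitals such as the "I" in ChildItem are lost, so the normalized name won't always match a cmdlet's official casing. For grouping and consistent display, that is usually acceptable.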

Parallel and Jobs

The module also contains a controller function (it could just as easily have been a script) that uses the module commands to process a given folder.

```powershell
Function Get-PSScriptInventory {
    [cmdletbinding()]
    [alias("psi")]
    [OutputType("PSInventory")]
    Param(
        [Parameter(Position = 0, Mandatory, HelpMessage = "Specify the root folder path")]
        [string]$Path,

        [Parameter(HelpMessage = "Recurse for files")]
        [switch]$Recurse,

        [Parameter(HelpMessage = "Specify the number of files to batch process. A value of 0 means do not run in batches or parallel.")]
        [ValidateSet(0,50,100,250,500)]
        [int]$BatchSize = 0
    )
```

The function includes a parameter to indicate if you want to "batch process" the folder. This only makes sense if you have a very large number of files.

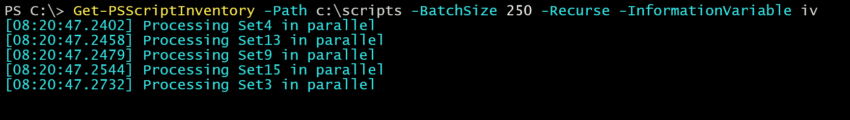

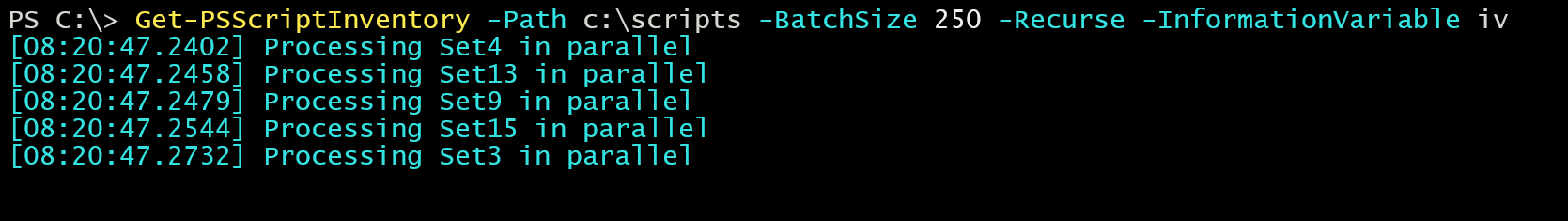

If you are running PowerShell 7, the files will be divided into batches and each batch processed in parallel.

```powershell
# $IsCoreCLR is only defined, and $true, in PowerShell 6 and later
if ($IsCoreCLR) {
    Write-Verbose "[PROCESS] Processing in parallel"
    $files = Get-PSFile -Path $Path -Recurse:$Recurse
    $totalfilecount = $files.count
    # split the file list into batches of $BatchSize files, keyed Set1, Set2, ...
    $sets = @{}
    $c = 0
    for ($i = 0 ; $i -lt $files.count; $i += $batchsize) {
        $c++
        $start = $i
        $end = $i + ($BatchSize-1)
        $sets.Add("Set$C", @($files[$start..$end]))
    }
    # each batch runs in its own runspace (5 at a time by default)
    $results = $sets.GetEnumerator() | ForEach-Object -Parallel {
        Write-Host "[$(Get-Date -format 'hh:mm:ss.ffff')] Processing $($_.name) in parallel" -ForegroundColor cyan
        Write-Information "Processing $($_.name)" -tags meta
        $_.value | Measure-ScriptFile
    }
} #coreCLR
```
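If you want to reuse the batching logic, it can be pulled into a small helper. This is a sketch of my own, not code from the module, and Split-Batch is a hypothetical name. It also rejects a batch size of 0, since a step of 0 would never advance the loop.

```powershell
# Hypothetical helper, not part of the module: split an array into batches
function Split-Batch {
    [CmdletBinding()]
    Param(
        [Parameter(Mandatory)]
        [object[]]$InputArray,

        # a step of 0 would never advance the loop, so require at least 1
        [Parameter(Mandatory)]
        [ValidateRange(1, [int]::MaxValue)]
        [int]$BatchSize
    )
    for ($i = 0; $i -lt $InputArray.Count; $i += $BatchSize) {
        # clamp the end index so the last batch only holds what's left
        $end = [math]::Min($i + $BatchSize, $InputArray.Count) - 1
        # the leading comma emits the batch as one array instead of unrolling it
        , $InputArray[$i..$end]
    }
}

$batches = @(Split-Batch -InputArray (1..7) -BatchSize 3)
$batches.Count
```

In PowerShell 7 you could pipe this output straight into ForEach-Object -Parallel, with each `$_` being one batch, instead of building a hashtable first.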

In the parallel branch I'm using Write-Information with a tag of meta. Later I can look at the tagged records and see exactly how long this process took.

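Here is a minimal sketch of the idea, assuming you capture the stream with the -InformationVariable common parameter. Invoke-SlowStep is a hypothetical stand-in for the real work, not part of the module.

```powershell
# Hypothetical stand-in for a long-running step
function Invoke-SlowStep {
    [CmdletBinding()]
    Param()
    Write-Information "Starting" -Tags meta
    Start-Sleep -Milliseconds 300
    Write-Information "Finished" -Tags meta
}

# -InformationVariable captures the records even though they aren't displayed
Invoke-SlowStep -InformationVariable info

# keep only the records tagged 'meta' and compare their timestamps
$meta = @($info | Where-Object { $_.Tags -contains 'meta' })
$elapsed = $meta[-1].TimeGenerated - $meta[0].TimeGenerated
"Elapsed: {0:N0} ms" -f $elapsed.TotalMilliseconds
```

Each InformationRecord carries its own TimeGenerated value, so the timing comes for free without any extra stopwatch code.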

In Windows PowerShell, I'm dividing the files into batches and then spinning off a thread job for each batch.


```powershell
ForEach-Object -begin {
    $totalfilecount = 0
    # holds the current batch of files
    $tmp = [System.Collections.Generic.List[object]]::new()
    $jobs = @()
    #define the scriptblock to run in a thread job
    $sb = {
        Param([object[]]$Files)
        $files | Measure-ScriptFile
    }
} -process {
    # once the batch is full, hand it to a thread job and start a new batch
    if ($tmp.Count -ge $batchsize) {
        Write-Host "[$(Get-Date -format 'hh:mm:ss.ffff')] Processing set of $($tmp.count) files" -ForegroundColor cyan
        Write-Information "Starting threadjob" -Tags meta
        # the unary comma passes the whole array as a single argument
        $jobs += Start-ThreadJob -ScriptBlock $sb -ArgumentList @(, $tmp.ToArray()) -Name tmpJob
        $tmp.Clear()
    }
    $totalfilecount++
    $tmp.Add($_)
} -end {
    #use the remaining objects
    Write-Host "[$(Get-Date -format 'hh:mm:ss.ffff')] Processing remaining set of $($tmp.count) files" -ForegroundColor cyan
    $jobs += Start-ThreadJob -ScriptBlock $sb -ArgumentList @(, $tmp.ToArray()) -name tmpJob
}

#wait for jobs to complete
Write-Verbose "[PROCESS] Waiting for $($jobs.count) jobs to complete"
$results = $jobs | Wait-Job | Receive-Job
```

This is similar to a background job, but appears to run a bit faster. In Windows PowerShell, this requires the ThreadJob module from the PowerShell Gallery. Even so, it is still slower in Windows PowerShell than the PowerShell 7 version. I'm betting that improvements were made to the AST in PowerShell 7, in addition to other performance improvements. Still, you can look at this code as a proof of concept.
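One detail in the thread job code worth isolating is `-ArgumentList @(, $tmp.ToArray())`. The sketch below uses Invoke-Command locally so it runs without the ThreadJob module; as far as I know, Start-ThreadJob and Start-Job bind -ArgumentList the same way. The file names are made up.

```powershell
# same shape as the thread job scriptblock: it expects ONE array argument
$sb = {
    Param([object[]]$Files)
    $Files.Count
}
$batch = 'a.ps1', 'b.ps1', 'c.ps1'

# without the unary comma, each element becomes a separate positional argument,
# so $Files only receives the first one
$unwrapped = Invoke-Command -ScriptBlock $sb -ArgumentList $batch

# with the unary comma, the whole array is passed as the single first argument
$wrapped = Invoke-Command -ScriptBlock $sb -ArgumentList @(, $batch)

"Without comma: $unwrapped  With comma: $wrapped"
```

Forgetting the comma doesn't throw an error. Each job would quietly process only the first file in its batch, which makes this an easy bug to miss.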

In fact, this entire module, which I hope you'll try out, is intended for educational purposes and to serve as a proof-of-concept platform. I hope you'll look at how I structured the module, how I handle cross-platform needs, how I used commands like Write-Information, and how I used a PowerShell class.

You can find this code on GitHub at https://github.com/jdhitsolutions/PSScriptingInventory. If you have comments, questions, or problems, feel free to post an issue. I also hope you'll try your hand at the Iron Scripter challenges.