I am always looking for opportunities to use PowerShell in a way that adds value to my work. And hopefully yours. This is one of the reasons it is worth the time and effort to learn PowerShell. It can be used in so many ways beyond the out-of-the-box commands. Once you understand the PowerShell language and embrace the paradigm of objects in the pipeline, PowerShell offers unlimited possibilities. In my case, I wanted to do more with the native Import-Csv command. I wanted to keep the original functionality, but I wanted it to do more. Here's how I did it. Even if you don't have a need for the end result, I hope you'll pay attention to the PowerShell scripting techniques and concepts. These are items that you can apply to your own work.


Copy-Command

I started by using the Copy-Command function from the PSScriptTools module. I knew I wanted to add some parameters and functionality. I originally thought I'd create a proxy function and replace Import-Csv with my custom version, but I ultimately decided to create a "wrapper" function. This type of function shares most, if not all, of the parameters of a target command and passes them through to it. The Copy-Command function copies the parameters from the original command to your new command.
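To get a feel for the raw material a wrapper or proxy function is built from, here is a rough sketch that uses only built-in commands. It lists the parameters and parameter sets of the native Import-Csv cmdlet, then generates the text of a proxy function with the .NET ProxyCommand class. This is an illustration of the underlying metadata, not a description of how Copy-Command works internally. The variable names are placeholders.

```powershell
# Look up the native cmdlet
$cmd = Get-Command -Name Import-Csv -CommandType Cmdlet

# Parameter names the cmdlet defines (common parameters such as Verbose are included)
$cmd.Parameters.Keys | Sort-Object

# Parameter sets and which one is the default
$cmd.ParameterSets | Select-Object -Property Name, IsDefault

# Generate the source text of a proxy function from the command metadata
$metadata = [System.Management.Automation.CommandMetadata]::new($cmd)
$proxyText = [System.Management.Automation.ProxyCommand]::Create($metadata)
$proxyText
```

The generated text is a starting point that you would still edit by hand, much like the output of Copy-Command.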

Adjusting Parameters

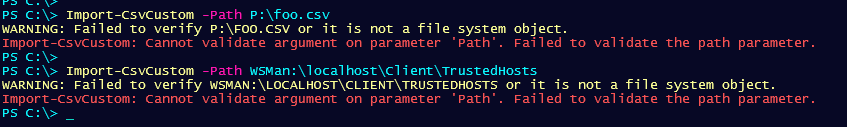

Once I had the new parameters, I began tweaking. First, I got rid of the LiteralPath parameter and combined it with the Path parameter. In essence, I'm treating every path as a literal path. I also added custom parameter validation.

    [Parameter(
        Position = 0,
        Mandatory,
        ValueFromPipeline,
        ValueFromPipelineByPropertyName,
        HelpMessage = "The path to the CSV file. Every path is treated as a literal path."
    )]
    [ValidateNotNullOrEmpty()]
    #Validate file exists
    [ValidateScript({
        If ((Test-Path $_) -AND ((Get-Item $_).PSProvider.Name -eq 'FileSystem')) {
            $True
        }
        else {
            Write-Warning "Failed to verify $($_.ToUpper()) or it is not a file system object."
            Throw "Failed to validate the path parameter."
            $False
        }
    })]
    [Alias("PSPath")]
    [string[]]$Path,

The validation script verifies the path and that it is a file system object.

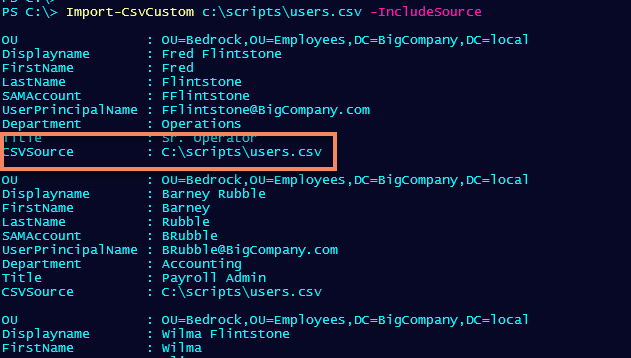
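The same validation idea can be tried on its own. The following is a minimal sketch, assuming a hypothetical function named Test-CsvPath that does nothing except validate its parameter. It uses $PSHOME only because that folder is known to exist in any PowerShell session.

```powershell
# Test-CsvPath is a hypothetical name used only for this illustration
function Test-CsvPath {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory, Position = 0)]
        [ValidateScript({
            # Accept only paths that exist and belong to the FileSystem provider
            if ((Test-Path -Path $_) -and ((Get-Item -Path $_).PSProvider.Name -eq 'FileSystem')) {
                $true
            }
            else {
                throw "Cannot validate '$_' as an existing file system path."
            }
        })]
        [string]$Path
    )
    "Validated $Path"
}

# A path that exists passes validation
Test-CsvPath -Path $PSHOME

# A missing path is rejected before the function body runs
try {
    Test-CsvPath -Path (Join-Path -Path $PSHOME -ChildPath 'no-such-file.csv')
}
catch {
    "Rejected: $($_.Exception.Message)"
}
```

Throwing from inside the validation script lets you supply your own error text instead of the generic validation message.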

I also knew there were two optional features I wanted to add when importing CSV data. First, I wanted to capture the source file. I wanted it to be another property in the custom output.

    [Parameter(HelpMessage = "Add a custom property to reflect the import source file.")]
    [switch]$IncludeSource,

I also wanted the ability to define a typename. When you import data from a CSV file, PowerShell writes a generic custom object to the pipeline. But if I give it a typename, and use custom format files (which you know I do), I can improve the import experience. We'll get to that in a bit.

System.Collections.Generic.List[]

Both of these actions will require that I modify the data after it has been imported with the native Import-Csv command. Remember, I'm writing a wrapper function that offers the same functionality plus my additions. Because I need to modify objects after the import and before I write them to the pipeline, I need a temporary place to hold them. Technically, an array would work. I could initialize an empty array and then add the imported items to it. However, arrays have a fixed size, so every time you add an item, PowerShell creates a new array and copies the existing items into it. For small datasets, this is not that critical. But I like to practice efficiency when I can, so I've been using generic lists.
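A small sketch makes the difference visible. It keeps a second reference to each collection and checks whether that reference still points at the same object after an item is added. The variable names are placeholders.

```powershell
# Arrays are fixed size: += builds a new array and copies the old items into it
$array = @(1, 2)
$originalArray = $array
$array += 3
[object]::ReferenceEquals($array, $originalArray)   # False: $array now points to a new array
$originalArray.Count                                # 2: the original array was left behind

# A generic list grows in place
$list = [System.Collections.Generic.List[object]]::new()
$originalList = $list
$list.Add(3)
[object]::ReferenceEquals($list, $originalList)     # True: still the same list object
```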

You can define a new list object like this:

    $in = [System.Collections.Generic.List[object]]::New()

You can specify what type of object is in the list, such as string, or in my case the generic 'object'. In my function, I'm taking advantage of the Using statement. Before the function I have this line of code:

    Using Namespace System.Collections.Generic

In my function, this simplifies the code necessary to create the list object.

    $in = [List[object]]::New()

You can add a single object to the list with the Add() method, or multiple objects all at once with AddRange(). I haven't found much difference between the two, so to keep it simple, I'll add each imported item to the list.
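Here is a short sketch of both methods, written as a standalone script so the using statement can sit at the top of the file where PowerShell requires it. The sample objects and the $names and $more variables are made up for illustration.

```powershell
using namespace System.Collections.Generic

# An object list accepts anything; a typed list only accepts its element type
$in = [List[object]]::new()
$names = [List[string]]::new()

# Add() appends a single item
$in.Add([pscustomobject]@{ Name = 'alpha'; Size = 1 })

# AddRange() appends a whole collection in one call
$more = [pscustomobject]@{ Name = 'bravo'; Size = 2 }, [pscustomobject]@{ Name = 'charlie'; Size = 3 }
$in.AddRange([object[]]$more)

# Collect the Name values into the typed list
$names.AddRange([string[]]($in.Name))

$in.Count          # 3
$names -join ','   # alpha,bravo,charlie
```

AddRange() expects a collection, which is one reason adding items one at a time is the simpler choice when an import might return a single object.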

    Microsoft.PowerShell.Utility\Import-Csv @PSBoundParameters | ForEach-Object { $in.Add($_) }

Notice that I'm splatting PSBoundParameters to the native command, and that I'm using the module-qualified command name. This would allow me to name my function Import-Csv, which would take precedence over the cmdlet at a command prompt, and still reach the original command. By the way, I'm not doing that. My function is called Import-CsvCustom, but I wanted to include this in case you decide to rename it, and to demonstrate a scripting concept.
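The pattern is easier to see in a tiny wrapper. This sketch assumes a hypothetical function, Get-RandomCustom, that wraps the native Get-Random cmdlet and adds one invented switch, AsString. Neither name comes from the function in this article.

```powershell
# Get-RandomCustom and its AsString switch are hypothetical, for illustration only
function Get-RandomCustom {
    [CmdletBinding()]
    param(
        [int]$Minimum,
        [int]$Maximum,
        [switch]$AsString
    )

    # Remove the parameter the native command does not know about
    [void]$PSBoundParameters.Remove('AsString')

    # Splat whatever the caller supplied to the module-qualified cmdlet
    $number = Microsoft.PowerShell.Utility\Get-Random @PSBoundParameters

    if ($AsString) { "$number" } else { $number }
}

Get-RandomCustom -Minimum 1 -Maximum 10
Get-RandomCustom -Minimum 1 -Maximum 10 -AsString
```

Only parameters the caller actually used end up in PSBoundParameters, so the native command never sees a parameter it was not given.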

Customizing Objects

With all of the imported data in the list, I can now modify the objects. If the user specified to include the source path, I'll add a note property to the objects in the list.

    if ($IncludeSource) {
        Write-Verbose "[PROCESS] Adding CSVSource property"
        $in | Add-Member -MemberType NoteProperty -Name CSVSource -Value $cPath -Force
    }

I'm toying with some ideas on how to make this a hidden property and to let me specify the property name. It is unlikely I'll have a column called CSVSource in any of my CSV files. But as a rule, I like to have flexibility.
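You can try the Add-Member step without a CSV file on disk. In this sketch the data comes from a here-string piped to ConvertFrom-Csv, and the source path is a made-up value standing in for the converted path in the function.

```powershell
# Sample data stands in for the objects returned by Import-Csv
$data = @'
Name,Size
alpha,1
bravo,2
'@ | ConvertFrom-Csv

# Hypothetical path, used only as the property value
$sourcePath = 'C:\data\sample.csv'

# Add the same note property to every object in the collection
$data | Add-Member -MemberType NoteProperty -Name CSVSource -Value $sourcePath -Force

$data | Select-Object -Property Name, Size, CSVSource
```

The Force parameter replaces an existing CSVSource member instead of raising an error if the property is already there.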

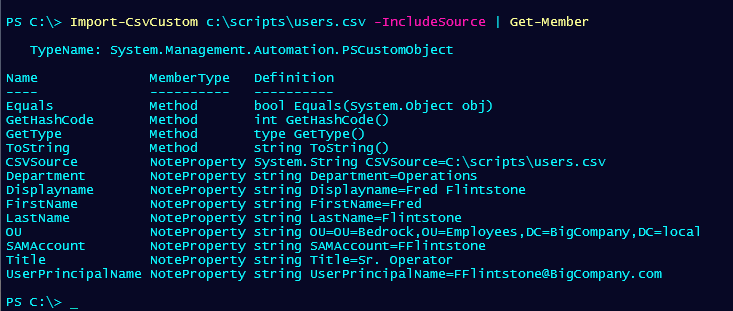

As I mentioned, when you import a CSV file, you get generic custom objects.

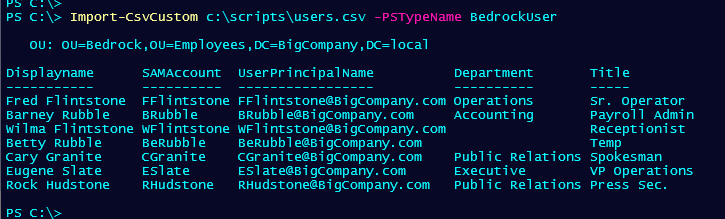

And as you can see in the previous image, I get a list of all objects and properties. But what if I had a custom format file that I've loaded into my session?

    Update-FormatData C:\scripts\bedrock.format.ps1xml

Now, I can import the data and assign a type name.
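If you don't have a format file handy, here is a rough way to see what a typename buys you. This sketch inserts a made-up typename, MyCsvItem, into sample objects and then swaps in Update-TypeData with a default display property set in place of a format file. That is a lighter technique than the ps1xml approach described above, not the same thing, but it needs no external file.

```powershell
# Sample data stands in for the objects returned by Import-Csv
$data = @'
Name,Size,Color
alpha,1,red
bravo,2,blue
'@ | ConvertFrom-Csv

# MyCsvItem is a hypothetical typename
$typeName = 'MyCsvItem'
foreach ($item in $data) {
    $item.psobject.TypeNames.Insert(0, $typeName)
}

# The custom name is now the first typename on each object
$data[0].psobject.TypeNames[0]

# Tell PowerShell which properties to show by default for this typename
Update-TypeData -TypeName $typeName -DefaultDisplayPropertySet Name, Color -Force

# Default output is limited to the display property set; other properties are still there
$data
$data | Select-Object -Property *
```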

Import-CsvCustom

Want to try for yourself?

    #requires -version 5.1

    <#
    This is a copy of:

    CommandType     Name          Version    Source
    -----------     ----          -------    ------
    Cmdlet          Import-Csv    3.1.0.0    Microsoft.PowerShell.Utility

    Created: 17 May 2021
    Author : Jeff Hicks

    Learn more about PowerShell: https://jdhitsolutions.com/blog/essential-powershell-resources/
    #>

    <#
    I am using a namespace to make defining a List[] object easier later
    in the script.
    #>
    Using Namespace System.Collections.Generic

    Function Import-CSVCustom {
        #TODO - Add comment-based help
        [CmdletBinding(DefaultParameterSetName = 'Delimiter')]
        Param(
            [Parameter(ParameterSetName = 'Delimiter', Position = 1)]
            [ValidateNotNull()]
            [char]$Delimiter,

            [Parameter(
                Position = 0,
                Mandatory,
                ValueFromPipeline,
                ValueFromPipelineByPropertyName,
                HelpMessage = "The path to the CSV file. Every path is treated as a literal path."
            )]
            [ValidateNotNullOrEmpty()]
            #Validate file exists
            [ValidateScript({
                If ((Test-Path $_) -AND ((Get-Item $_).PSProvider.Name -eq 'FileSystem')) {
                    $True
                }
                else {
                    Write-Warning "Failed to verify $($_.ToUpper()) or it is not a file system object."
                    Throw "Failed to validate the path parameter."
                    $False
                }
            })]
            [Alias("PSPath")]
            [string[]]$Path,

            [Parameter(ParameterSetName = 'UseCulture', Mandatory)]
            [ValidateNotNull()]
            [switch]$UseCulture,

            [ValidateNotNullOrEmpty()]
            [string[]]$Header,

            [ValidateSet('Unicode', 'UTF7', 'UTF8', 'ASCII', 'UTF32', 'BigEndianUnicode', 'Default', 'OEM')]
            [string]$Encoding,

            [Parameter(HelpMessage = "Add a custom property to reflect the import source file.")]
            [switch]$IncludeSource,

            [Parameter(HelpMessage = "Insert an optional custom type name.")]
            [ValidateNotNullOrEmpty()]
            [string]$PSTypeName
        )

        Begin {
            Write-Verbose "[BEGIN ] Starting $($MyInvocation.Mycommand)"
            Write-Verbose "[BEGIN ] Using parameter set $($PSCmdlet.ParameterSetName)"
            Write-Verbose ($PSBoundParameters | Out-String)

            #remove parameters that don't belong to the native Import-Csv command
            if ($PSBoundParameters.ContainsKey("IncludeSource")) {
                [void]$PSBoundParameters.Remove("IncludeSource")
            }
            if ($PSBoundParameters.ContainsKey("PSTypeName")) {
                [void]$PSBoundParameters.Remove("PSTypeName")
            }
        } #begin

        Process {
            <#
            Initialize a generic list to hold each imported object so it can be
            processed for CSVSource and/or typename
            #>
            $in = [List[object]]::New()

            #convert the path value to a complete filesystem path
            $cPath = Convert-Path -Path $Path

            #update the value of the PSBoundparameter
            $PSBoundParameters["Path"] = $cPath

            Write-Verbose "[PROCESS] Importing from $cPath"

            <#
            Add each imported item to the collection.
            It is theoretically possible to have a CSV file of 1 object, so
            instead of testing to determine whether to use Add() or AddRange(),
            I'll simply Add each item.

            I'm using the fully qualified cmdlet name in case I want this function
            to become my Import-Csv command.
            #>
            Microsoft.PowerShell.Utility\Import-Csv @PSBoundParameters | ForEach-Object { $in.Add($_) }

            Write-Verbose "[PROCESS] Post-processing $($in.count) objects"

            if ($IncludeSource) {
                Write-Verbose "[PROCESS] Adding CSVSource property"
                $in | Add-Member -MemberType NoteProperty -Name CSVSource -Value $cPath -Force
            }

            if ($PSTypeName) {
                Write-Verbose "[PROCESS] Adding PSTypename $PSTypeName"
                $($in).foreach({ $_.psobject.typenames.insert(0, $PSTypeName)})
            }

            #write the results to the pipeline
            $in
        } #process

        End {
            Write-Verbose "[END ] Ending $($MyInvocation.Mycommand)"
        } #end

    } #end Import-CsvCustom

Save the code to a file that you can dot source in your PowerShell session. Then import CSV files and try it out. The function should work in Windows PowerShell and PowerShell 7.x. The function includes Verbose output and hopefully plenty of internal documentation to help you understand what I am doing.

I hope you find this useful, or at least informative. And just maybe you too will begin thinking of ways to use PowerShell to do more.