Let's continue exploring my PowerShell-based backup system. If you are just jumping in, be sure to read part 1 and part 2 first. At the end of the previous article, I had set up a scheduled job that logs changed files in key folders to CSV files. The next order of business is to take the data in these files and create my incremental backup. I'm using WinRAR as my archiving tool, but you can use whatever command-line application you choose.

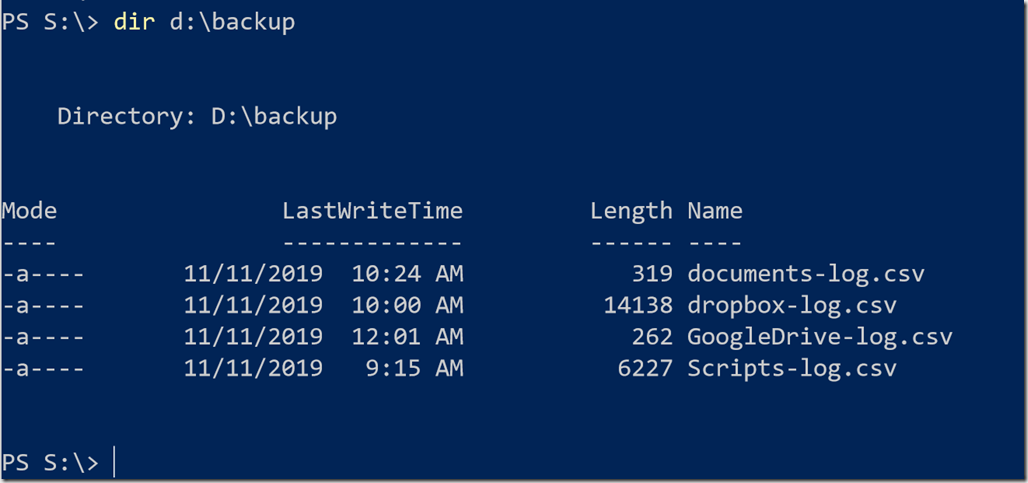

The CSV Files

My scheduled PowerShell job, which runs continuously, creates CSV files that record new and changed files.

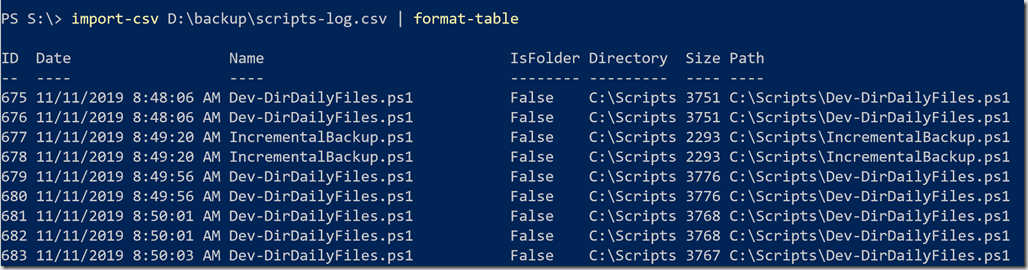

Each CSV file contains an entry for every event the FileSystemWatcher detected.

As you can see, there are often multiple entries for the same file. That's OK; I'll handle that in the backup script.
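To see how grouping collapses those duplicate entries, here is a minimal sketch that runs Group-Object over a few rows. The column names match the ones the backup script filters on (Path, Name, Directory, Size, IsFolder, ID), but the values are made up for illustration.

```powershell
# Made-up sample of the watcher log: two events for demo.ps1, one for notes.txt
$events = @'
ID,Path,Name,Directory,Size,IsFolder
1,C:\Scripts\demo.ps1,demo.ps1,C:\Scripts,120,False
2,C:\Scripts\demo.ps1,demo.ps1,C:\Scripts,133,False
3,C:\Scripts\notes.txt,notes.txt,C:\Scripts,42,False
'@ | ConvertFrom-Csv

# One group per full path, however many events were logged for that file
$groups = $events | Group-Object -Property Path

# Name holds the full path; Count is the number of logged events for it
$groups | Select-Object -Property Count, Name
```

Each group's Name is the full path of a file, so the backup loop only has to visit each file once.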

Backing Up

To create the incremental backups, I need to import each CSV file, filter out the temporary files I don't want to back up, and group the remaining entries so each file appears only once. I also skip zero-byte files.

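```powershell
# Size is cast to [int64] because Import-Csv returns every value as a string.
# -LiteralPath keeps names containing [ or ] from being read as wildcards.
$files = Import-Csv -Path $path |
    Where-Object { ($_.Name -notmatch '~|\.tmp') -and ([int64]$_.Size -gt 0) -and ($_.IsFolder -eq 'False') -and (Test-Path -LiteralPath $_.Path) } |
    Select-Object -Property Path, Size, Directory, IsFolder, ID |
    Group-Object -Property Path
```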

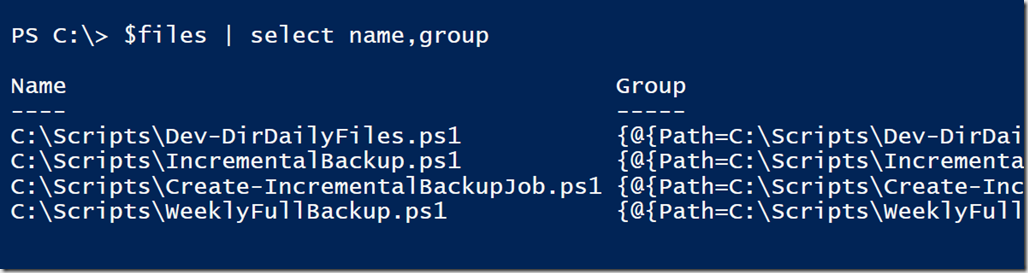

$Path is the path to one of the CSV files. You'll also notice that I"m testing if a file still exists. I may create a file, which would get logged, and delete it later in the day. Obviously it doesn't make sense to attempt to backup a non-existent file. I group the files on their full path name. Here's a peek at what that looks like.
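Two details in that kind of filter are easy to trip over, so here is a small, self-contained demo using made-up rows and a throwaway file in the temp folder. First, Import-Csv returns every value as a string, so casting Size to [int64] makes the zero-byte check numeric rather than textual. Second, Test-Path -Path treats square brackets as wildcard characters, so a file such as notes[1].txt can look missing; -LiteralPath checks the name exactly as written.

```powershell
# 1. Made-up rows; every field arrives as a string, as with Import-Csv
$rows = @'
Name,Size,IsFolder
report.docx,2048,False
~$report.docx,162,False
data.tmp,512,False
empty.txt,0,False
Projects,0,True
'@ | ConvertFrom-Csv

# Same name/size/folder rules as the backup filter, with a numeric size test
$kept = @($rows | Where-Object {
    ($_.Name -notmatch '~|\.tmp') -and
    ([int64]$_.Size -gt 0) -and
    ($_.IsFolder -eq 'False')
})
$kept.Name   # only report.docx survives

# 2. A real file whose name contains square brackets, in a throwaway folder
$demoDir  = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath ([guid]::NewGuid().ToString())
[void][System.IO.Directory]::CreateDirectory($demoDir)
$demoFile = Join-Path -Path $demoDir -ChildPath 'notes[1].txt'
[System.IO.File]::WriteAllText($demoFile, 'demo')

$viaPath    = Test-Path -Path $demoFile          # False: "[1]" is read as a wildcard
$viaLiteral = Test-Path -LiteralPath $demoFile   # True: the name is taken as written

Remove-Item -LiteralPath $demoDir -Recurse -Force
```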

What I need to back up are the files listed in each group's Name property, which holds the file's full path. For each one, I create a temporary folder and, beneath it, a subfolder structure that mirrors the file's original location. I then copy each file to this temporary location.

```powershell
foreach ($file in $files) {
    # Every entry in a group is the same file, so use the first entry's folder
    $parentFolder = $file.Group[0].Directory
    # Mirror the original folder (minus the "C:\" drive prefix) under the temp root
    $relPath = Join-Path -Path "D:\backtemp\$Name" -ChildPath $parentFolder.Substring(3)

    if (-not (Test-Path -LiteralPath $relPath)) {
        Write-Host "Creating $relPath" -ForegroundColor Cyan
        $new = New-Item -Path $relPath -ItemType Directory -Force
        # Start-Sleep -Milliseconds 100

        # Copy hidden attributes (for example, on .git folders)
        $attrib = (Get-Item -LiteralPath $parentFolder -Force).Attributes
        if ($attrib -match "hidden") {
            Write-Host "Copying attributes from $parentFolder to $($new.FullName)" -ForegroundColor Yellow
            Write-Host $attrib -ForegroundColor Yellow
            (Get-Item -LiteralPath $new.FullName -Force).Attributes = $attrib
        }
    }

    # $file.Name is the full path of the file to back up
    Write-Host "Copying $($file.Name) to $relPath" -ForegroundColor Green
    $f = Copy-Item -LiteralPath $file.Name -Destination $relPath -Force -PassThru
    # Copy the file's own attributes
    $f.Attributes = (Get-Item -LiteralPath $file.Name -Force).Attributes
} #foreach file
```
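The folder-mapping step in that loop can be pulled out into a function and tried safely in a temp folder. This is a sketch rather than the script above: the function name Copy-ToStaging and its parameters are hypothetical, and instead of hard-coding Substring(3) to drop a drive prefix such as C:\, it computes each file's folder relative to a source root you pass in.

```powershell
# Hypothetical helper: copy one file into a staging tree that mirrors its
# folder structure relative to $SourceRoot.
function Copy-ToStaging {
    param(
        [Parameter(Mandatory)][string]$SourceRoot,
        [Parameter(Mandatory)][string]$StagingRoot,
        [Parameter(Mandatory)][string]$FilePath
    )

    $parent = Split-Path -Path $FilePath -Parent
    # Folder path below the source root, e.g. "a\b" (empty for files directly in the root)
    $relative = $parent.Substring($SourceRoot.Length).TrimStart('\', '/')
    $target = if ($relative) { Join-Path -Path $StagingRoot -ChildPath $relative } else { $StagingRoot }

    if (-not (Test-Path -LiteralPath $target)) {
        New-Item -Path $target -ItemType Directory -Force | Out-Null
    }
    # Return the copied item so the caller can inspect or adjust it
    Copy-Item -LiteralPath $FilePath -Destination $target -Force -PassThru
}

# Demo in a throwaway temp folder: src\a\b\x.txt should land in stage\a\b\x.txt
$work  = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath ([guid]::NewGuid().ToString())
$src   = Join-Path -Path $work -ChildPath 'src'
$stage = Join-Path -Path $work -ChildPath 'stage'
$sub   = Join-Path -Path $src -ChildPath (Join-Path -Path 'a' -ChildPath 'b')
New-Item -Path $sub -ItemType Directory -Force | Out-Null
$sourceFile = Join-Path -Path $sub -ChildPath 'x.txt'
Set-Content -LiteralPath $sourceFile -Value 'hello'

$copied   = Copy-ToStaging -SourceRoot $src -StagingRoot $stage -FilePath $sourceFile
$expected = Join-Path -Path $stage -ChildPath (Join-Path -Path 'a' -ChildPath (Join-Path -Path 'b' -ChildPath 'x.txt'))
$copiedOk = Test-Path -LiteralPath $expected

Remove-Item -LiteralPath $work -Recurse -Force
```

Passing the root explicitly also means the same helper works for folders that are not at the top of a drive.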

Because I'm trying to recreate the folder structure in the archive, I also copy the hidden attribute from each file's parent folder. This lets me keep the .git folders hidden in my backup. I can then archive the temporary folder tree and remove the temporary items and the CSV file, so the process can repeat with a fresh start.
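The hidden-folder check works because -match first converts the attribute value to text such as "Hidden, Directory". If you'd rather test the flag itself, the enum's HasFlag() method does that directly. A minimal illustration with hard-coded attribute values (no real folders are touched):

```powershell
# Attribute value of a hidden folder such as .git (hard-coded for illustration)
$hiddenFolder = [System.IO.FileAttributes]'Directory, Hidden'
$plainFolder  = [System.IO.FileAttributes]::Directory

# Text-based test, as in the backup script: the enum becomes "Hidden, Directory"
$byMatch = $hiddenFolder -match 'hidden'

# Bit-based test: checks the Hidden flag regardless of how the value renders as text
$byFlag    = $hiddenFolder.HasFlag([System.IO.FileAttributes]::Hidden)
$plainFlag = $plainFolder.HasFlag([System.IO.FileAttributes]::Hidden)

"match: $byMatch  flag: $byFlag  plain folder hidden: $plainFlag"
```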

Here's the complete incremental backup script.

```powershell
Import-Module C:\scripts\PSRAR\Dev-PSRar.psm1 -Force

$paths = Get-ChildItem -Path D:\Backup\*.csv | Select-Object -ExpandProperty FullName

foreach ($path in $paths) {
    # The CSV file name prefix (before the first "-") names this backup set
    $name = (Split-Path -Path $path -Leaf).Split("-")[0]
    # Root of the temporary folder tree for this backup set
    $tempRoot = "D:\backtemp\$name"

    $files = Import-Csv -Path $path |
        Where-Object { ($_.Name -notmatch '~|\.tmp') -and ([int64]$_.Size -gt 0) -and ($_.IsFolder -eq 'False') -and (Test-Path -LiteralPath $_.Path) } |
        Select-Object -Property Path, Size, Directory, IsFolder, ID |
        Group-Object -Property Path

    foreach ($file in $files) {
        $parentFolder = $file.Group[0].Directory
        $relPath = Join-Path -Path $tempRoot -ChildPath $parentFolder.Substring(3)

        if (-not (Test-Path -LiteralPath $relPath)) {
            Write-Host "Creating $relPath" -ForegroundColor Cyan
            $new = New-Item -Path $relPath -ItemType Directory -Force
            # Start-Sleep -Milliseconds 100

            # Copy hidden attributes from the source folder
            $attrib = (Get-Item -LiteralPath $parentFolder -Force).Attributes
            if ($attrib -match "hidden") {
                Write-Host "Copying attributes from $parentFolder to $($new.FullName)" -ForegroundColor Yellow
                Write-Host $attrib -ForegroundColor Yellow
                (Get-Item -LiteralPath $new.FullName -Force).Attributes = $attrib
            }
        }

        Write-Host "Copying $($file.Name) to $relPath" -ForegroundColor Green
        $f = Copy-Item -LiteralPath $file.Name -Destination $relPath -Force -PassThru
        # Copy the file's attributes
        $f.Attributes = (Get-Item -LiteralPath $file.Name -Force).Attributes
    } #foreach file

    if ($files) {
        # Create a RAR archive of the whole temporary tree, or substitute your archiving code.
        # Archive $tempRoot rather than $relPath, which only points at the last folder used.
        $archive = Join-Path -Path D:\BackTemp -ChildPath "$(Get-Date -Format yyyyMMdd)_$name-INCREMENTAL.rar"
        Add-RARContent -Object $tempRoot -Archive $archive -CompressionLevel 5 -Comment "Incremental backup $(Get-Date)"
        Move-Item -Path $archive -Destination \\ds416\backup -Force
        Remove-Item -Path $tempRoot -Force -Recurse
    }

    Remove-Item -Path $path
} #foreach path
```
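If you don't use WinRAR, the archive step could be swapped for PowerShell's own Compress-Archive. The sketch below runs against a throwaway folder in the temp directory; the set name Scripts and the demo file are made up, and in the real script the staging root would be D:\backtemp\$name. One caveat: the Compress-Archive documentation notes that it ignores hidden files and folders, so hidden items such as .git folders would not make it into the zip. If you need those, an external archiver is likely the better fit.

```powershell
# Hypothetical stand-in for the RAR step, demonstrated in a throwaway temp folder
$name    = 'Scripts'   # made-up backup set name
$work    = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath ([guid]::NewGuid().ToString())
$staging = Join-Path -Path $work -ChildPath $name

# Fake staged content: one folder with one file
$demoDir = Join-Path -Path $staging -ChildPath 'demo'
New-Item -Path $demoDir -ItemType Directory -Force | Out-Null
Set-Content -Path (Join-Path -Path $demoDir -ChildPath 'readme.txt') -Value 'demo'

# Same naming pattern as the RAR version, with a .zip extension
$archive = Join-Path -Path $work -ChildPath "$(Get-Date -Format yyyyMMdd)_$name-INCREMENTAL.zip"

# Archive everything under the staging root, not just one subfolder
Compress-Archive -Path (Join-Path -Path $staging -ChildPath '*') -DestinationPath $archive -CompressionLevel Optimal

$archiveSize = (Get-Item -LiteralPath $archive).Length

# The real script would Move-Item the archive to the NAS before cleaning up
Remove-Item -LiteralPath $work -Recurse -Force
```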

Creating the Scheduled Job

The last part of the process is to set up another PowerShell scheduled job that processes the CSV files and backs up the files. I run my weekly full backup on Friday nights at 10:00 PM (I'll share that code in a future article), so I can skip the incremental backup on Friday night. I use this code to create the PowerShell scheduled job.

```powershell
$filepath = "C:\scripts\IncrementalBackup.ps1"

if (Test-Path $filepath) {
    $trigger = New-JobTrigger -At 10:00PM -DaysOfWeek Saturday,Sunday,Monday,Tuesday,Wednesday,Thursday -Weekly
    $jobOpt = New-ScheduledJobOption -RunElevated -RequireNetwork -WakeToRun
    Register-ScheduledJob -FilePath $filepath -Name "DailyIncremental" -Trigger $trigger -ScheduledJobOption $jobOpt -MaxResultCount 7 -Credential jeff
}
else {
    Write-Warning "Can't find $filepath"
}
```
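Rather than typing out the six days, you could compute them by excluding Friday from the DayOfWeek enum. This is just an alternative way to build the same -DaysOfWeek value. The PSScheduledJob cmdlets are a Windows PowerShell feature, so the sketch only builds the trigger where New-JobTrigger is available.

```powershell
# Every day of the week except Friday, when the weekly full backup runs
$days = [System.Enum]::GetValues([System.DayOfWeek]) |
    Where-Object { $_ -ne [System.DayOfWeek]::Friday }

$days -join ', '   # Sunday, Monday, Tuesday, Wednesday, Thursday, Saturday

# Only build the trigger where the PSScheduledJob cmdlets exist
if (Get-Command -Name New-JobTrigger -ErrorAction SilentlyContinue) {
    $trigger = New-JobTrigger -Weekly -At '10:00PM' -DaysOfWeek $days
}
```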

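Because I am copying files to my NAS device, the job needs to run with a credential that can reach the share. Passing a user name such as jeff to -Credential prompts for the password when the job is registered.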

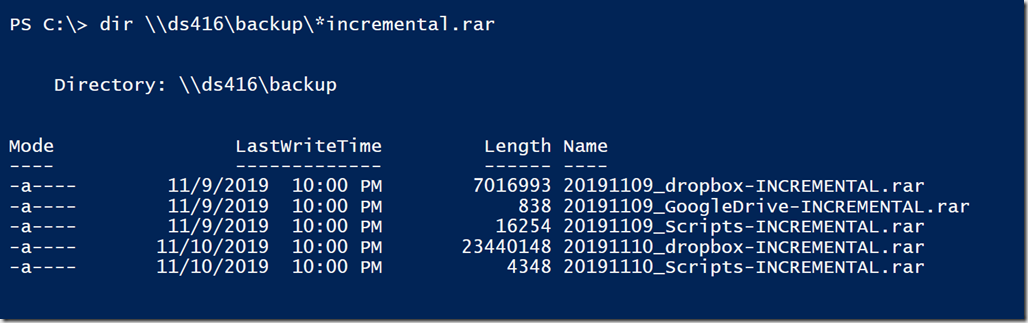

Here are some of my recent results.

Next Time

In the next article in this series I'll go over the full backup code I'm using, plus a few management control scripts that help me keep on top of the process.

Could the -NoQualifier switch of Split-Path help recreate the path in the temp folder?

That looks like it would be a good suggestion.
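To make that suggestion concrete, here's a small comparison using a made-up folder path. Substring(3) assumes a one-letter drive followed by ":\", while Split-Path -NoQualifier removes the drive qualifier (such as C:) whatever its length. The -NoQualifier result keeps a leading backslash, which Join-Path handles when it builds the temp path.

```powershell
# Made-up source folder for illustration
$directory = 'C:\Users\jeff\Scripts'

# Current approach: drop the first three characters ("C:\")
$viaSubstring = $directory.Substring(3)

# Suggested approach: let Split-Path drop the drive qualifier ("C:")
$viaNoQualifier = Split-Path -Path $directory -NoQualifier

"Substring(3): $viaSubstring"
"NoQualifier:  $viaNoQualifier"
```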