Yesterday I began a series of articles documenting my PowerShell-based backup system. The core of my system uses the System.IO.FileSystemWatcher to track daily file changes so I know what to back up. However, there are some challenges. I need to watch several folders, I need an easy way to know what to back up, and it all needs to happen automatically, without any effort on my part such as starting PowerShell and kicking off a process. My solution is to leverage a PowerShell scheduled job.


Creating the PowerShell Scheduled Job

A PowerShell Scheduled Job takes advantage of the Windows Task Scheduler to run a PowerShell scriptblock or script. You get the ease of using the task scheduler with the benefit of running PowerShell code. While it is simple enough to create a scheduled job from the console, I tend to save the command in a script file. Doing so gives me a documentation trail and makes it easier to recreate the job. While it is possible to edit an existing job, I find it easier to simply remove it and recreate it. Using a script file makes this an easy process.

My scheduled job needs an action to perform. For backup purposes this means creating a FileSystemWatcher to monitor each directory and a corresponding event subscription. Each event needs to be able to log the file changes so that I know what needs to be backed up incrementally. To begin, I created a text file with the paths to monitor.

```
#comment out paths with a # symbol at the beginning of the line
C:\Scripts
C:\users\jeff\documents
D:\jdhit
C:\users\jeff\dropbox
C:\Users\Jeff\Google Drive
```

This is C:\Scripts\myBackupPaths.txt. In my scheduled job action I parse this file to get a list of paths.

```powershell
if (Test-Path c:\scripts\myBackupPaths.txt) {
    #filter out commented lines and lines with just white space
    $paths = Get-Content c:\scripts\myBackupPaths.txt | Where-Object {$_ -match "(^[^#]\S*)" -and $_ -notmatch "^\s+$"}
}
else {
    Throw "Failed to find c:\scripts\myBackupPaths.txt"
    #bail out
    Return
}
```
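If you want the list parsing to be a little more forgiving, here is a minimal sketch of a hypothetical Get-BackupPath helper (the name is mine, not part of the original system). It trims each line first and then drops empty lines and lines that start with #. One difference from the regex version: an indented comment such as "  #old path" is also skipped, whereas the regex lets it through because its first character is a space rather than #.

```powershell
# Hypothetical helper: read the path list, ignoring blank lines and comments.
function Get-BackupPath {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string]$ListFile
    )
    if (-not (Test-Path -Path $ListFile)) {
        throw "Failed to find $ListFile"
    }
    Get-Content -Path $ListFile |
        # trim first so indented comments and whitespace-only lines are caught
        ForEach-Object { $_.Trim() } |
        # an empty string is falsy, so blank lines drop out here as well
        Where-Object { $_ -and -not $_.StartsWith('#') }
}
```

In the job action this would replace the if/else block with a single line such as `$paths = Get-BackupPath -ListFile c:\scripts\myBackupPaths.txt`, and the later `.Trim()` call becomes unnecessary.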

I added some filtering to strip out empty and commented lines. For each path, I'm going to create a watcher and event subscription. As I'll show you in a bit, when an event fires, meaning a file was changed, I intend to log it to a CSV file. The action for each event subscription will be to run a PowerShell script file.

```powershell
Foreach ($Path in $Paths.Trim()) {
    #get the directory name from the list of paths
    $name = ((Split-Path $path -Leaf).replace(' ', ''))
    #build the path of the CSV log file for this folder
    $log = "D:\Backup\{0}-log.csv" -f $name
    #define the watcher object
    Write-Host "Creating a FileSystemWatcher for $Path" -ForegroundColor green
    $watcher = [System.IO.FileSystemWatcher]($path)
    $watcher.IncludeSubdirectories = $True
    #enable the watcher
    $watcher.EnableRaisingEvents = $True
    #the Action scriptblock to be run when an event fires
    $sbtext = "c:\scripts\LogBackupEntry.ps1 -event `$event -CSVPath $log"
    $sb = [scriptblock]::Create($sbtext)
    #...the event subscription for this watcher is registered here
} #foreach path
```

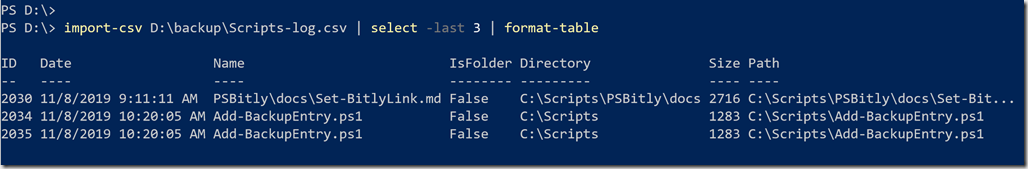

The log file name uses the last part of each folder path. If that name has a space, as in 'Google Drive', I remove the space so the name becomes 'GoogleDrive'. I end up with one CSV file per folder, such as D:\Backup\Scripts-log.csv.
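One consequence of naming logs after the leaf folder is worth checking up front. Two folders with the same leaf name, say C:\Scripts and D:\Scripts, would share a log file and also the SourceIdentifier "FileChange-Scripts". Register-ObjectEvent refuses a duplicate SourceIdentifier, so the second subscription would fail. Here is a sketch of a hypothetical Get-BackupLogName helper that derives the same names and warns about such collisions. It splits on both \ and / itself instead of calling Split-Path; that choice is mine, not the original's.

```powershell
# Hypothetical helper: derive each folder's log path and SourceIdentifier the
# way the job does (leaf name, spaces removed) and warn when two folders collide.
function Get-BackupLogName {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string[]]$Path,
        [string]$LogFolder = 'D:\Backup'
    )
    # @{} keys are case-insensitive, so 'Scripts' and 'scripts' count as a collision
    $seen = @{}
    foreach ($p in $Path) {
        # last path segment, accepting either separator
        $leaf = ($p.TrimEnd('\', '/') -split '[\\/]')[-1]
        $name = $leaf.Replace(' ', '')
        if ($seen.ContainsKey($name)) {
            Write-Warning "'$p' and '$($seen[$name])' both map to $name-log.csv"
        }
        else {
            $seen[$name] = $p
        }
        [pscustomobject]@{
            Path             = $p
            Log              = '{0}\{1}-log.csv' -f $LogFolder, $name
            SourceIdentifier = "FileChange-$name"
        }
    }
}
```

For example, `Get-BackupLogName -Path (Get-Content c:\scripts\myBackupPaths.txt)` would show you up front which log file each folder writes to. Comment lines would need to be filtered out first.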

Logging Files

The scriptblock text uses variable expansion, so $log is replaced with the actual log file path. I escape $event with a backtick so that the text keeps the literal $event. When I create the scriptblock, it looks like this:

```powershell
c:\scripts\LogBackupEntry.ps1 -event $event -CSVPath D:\Backup\Scripts-log.csv
```
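To see the expansion for yourself, here is a minimal sketch that builds the same string for a single hard-coded log path and prints the resulting scriptblock text. D:\Backup\Scripts-log.csv is just the example value.

```powershell
# $log is expanded when the double-quoted string is built;
# the backtick keeps `$event as literal text for the event action to resolve later.
$log = 'D:\Backup\Scripts-log.csv'
$sbtext = "c:\scripts\LogBackupEntry.ps1 -event `$event -CSVPath $log"
$sb = [scriptblock]::Create($sbtext)

# Creating the scriptblock does not run it; ToString() returns its source text
$sb.ToString()
```

Because $log is pasted into the command unquoted, a log path containing spaces would be split into two arguments. Wrapping it in single quotes inside the template (`-CSVPath '$log'`) would guard against that. With spaces stripped from the name and D:\Backup as the folder, this isn't an issue here.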

$event is the fired event object, the same kind of object you get from Get-Event. The event is passed to this script, C:\scripts\LogBackupEntry.ps1:

```powershell
#requires -version 5.1

[cmdletbinding()]
Param(
    [Parameter(Mandatory)]
    [object]$Event,

    [Parameter(Mandatory)]
    [string]$CSVPath
)

#uncomment for debugging and testing
# this will create a serialized version of each fired event
# $event | export-clixml ([System.IO.Path]::GetTempFileName()).replace("tmp","xml")

#-LiteralPath so file names containing [ ] are not treated as wildcards
if (Test-Path -LiteralPath $event.SourceEventArgs.fullpath) {
    $f = Get-Item -LiteralPath $event.SourceEventArgs.fullpath -force
    #only save files and not a temp file
    #test the full Name: BaseName drops the extension, so \.tmp$ could never match it
    if ((-Not $f.psiscontainer) -AND ($f.Name -notmatch "(^(~|__rar).*)|(.*\.tmp$)")) {
        [pscustomobject]@{
            ID        = $event.EventIdentifier
            Date      = $event.timeGenerated
            Name      = $event.sourceEventArgs.Name
            IsFolder  = $f.PSisContainer
            Directory = $f.DirectoryName
            Size      = $f.length
            Path      = $event.sourceEventArgs.FullPath
        } | Export-Csv -NoTypeInformation -Path $CSVPath -Append
    } #if not a container
} #if test-path

#end of script
```

The script ultimately exports a custom object to the appropriate CSV file. However, a file may already be gone by the time the event is processed (temp files often are), so I use Test-Path to verify that it still exists. If it does, I get the file and apply some additional filtering so that I export only files, and only if they aren't temp files. For example, while you are using PowerPoint you'll get a number of temp files that persist until you close the application, at which point they are removed. I don't want to log those to the CSV file.
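The temp-file test is easier to check in isolation. Here it is as a standalone sketch, a hypothetical Test-BackupCandidate function that applies the same pattern to a file name. Note that it tests the full name with its extension. Testing BaseName would strip the extension, so the `\.tmp$` branch could never match.

```powershell
# Hypothetical helper: returns $true if a file name should be logged for backup.
function Test-BackupCandidate {
    param(
        [Parameter(Mandatory)]
        [string]$FileName
    )
    # Excludes names starting with ~ (such as Office ~$ owner files),
    # names starting with __rar, and anything ending in .tmp.
    # -notmatch is case-insensitive, so .TMP is excluded too.
    $FileName -notmatch '(^(~|__rar).*)|(.*\.tmp$)'
}
```

A quick way to tune the pattern is to feed it file names you have actually seen in the log, for example `'~$deck.pptx', 'deck.pptx' | ForEach-Object { Test-BackupCandidate -FileName $_ }`.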

An interesting side note regarding PowerPoint: the actual pptx file is never detected as having changed. In other words, when I edit a PowerPoint presentation, the file never gets logged. That's OK, because I can always add files to the CSV file manually with this function:

```powershell
Function Add-BackupEntry {
    [cmdletbinding(SupportsShouldProcess)]
    Param(
        [Parameter(Position = 0, Mandatory, ValueFromPipeline)]
        [string]$Path,

        [Parameter(Mandatory)]
        [ValidateScript( { Test-Path $_ })]
        [string]$CSVFile
    )

    Begin {
        Write-Verbose "[BEGIN ] Starting: $($MyInvocation.Mycommand)"
        $add = @()
    } #begin

    Process {
        Write-Verbose "[PROCESS] Adding: $Path"
        $file = Get-Item $Path
        $add += [pscustomobject]@{
            ID        = 99999
            Date      = $file.LastWriteTime
            Name      = $file.name
            IsFolder  = "False"
            Directory = $file.Directory
            Size      = $file.length
            Path      = $file.FullName
        }
    } #process

    End {
        Write-Verbose "[END ] Exporting to $CSVFile"
        $add | Export-Csv -Path $CSVFile -Append -NoTypeInformation
        Write-Verbose "[END ] Ending: $($MyInvocation.Mycommand)"
    } #end
}
```

I can check the CSV file at any time to see what files are going to be backed up.

The FileSystemWatcher often fires several times for the same file. I'll handle that later.
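Until then, here is one possible way to preview a log without the repeats. It is a sketch of a hypothetical Get-BackupQueue function, not the approach from the next article. It groups the entries by Path and keeps the last row in each group. Because entries are appended as events fire, the last row is the most recent one.

```powershell
# Hypothetical helper: one row per file from a watcher log, keeping the latest entry.
function Get-BackupQueue {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string]$CSVPath
    )
    Import-Csv -Path $CSVPath |
        # Group-Object compares case-insensitively by default, which suits Windows paths
        Group-Object -Property Path |
        ForEach-Object {
            # each group holds every logged event for one file; keep the last one
            $_.Group | Select-Object -Last 1
        }
}
```

Usage might look like `Get-BackupQueue -CSVPath D:\Backup\Scripts-log.csv | Format-Table Date, Path`. Note that Import-Csv returns every column as a string, so convert Size or Date if you need to sort or sum them.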

Creating the Event Subscription

I still need to create the event subscription for each folder and watcher.

```powershell
$params = @{
    InputObject      = $watcher
    Eventname        = "changed"
    SourceIdentifier = "FileChange-$Name"
    MessageData      = "A file was created or changed in $Path"
    Action           = $sb
}

$params.MessageData | Out-String | Write-Host -ForegroundColor cyan
$params.Action | Out-String | Write-Host -ForegroundColor Cyan

Register-ObjectEvent @params
```
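The job only subscribes to the Changed event. One watcher can also feed several subscriptions. That may be relevant to the PowerPoint observation: a common explanation is that Office saves by writing a temporary file and then renaming it over the original. That sequence would raise Created or Renamed events rather than Changed. This is an assumption, not something verified here. The sketch below registers Changed, Created and Renamed on one watcher. The demo folder, the SourceIdentifier names and the Write-Host action are placeholders for illustration.

```powershell
# Placeholder folder for the demo; in the backup job this would be $Path
$folder = Join-Path ([System.IO.Path]::GetTempPath()) 'WatchDemo'
$null = New-Item -Path $folder -ItemType Directory -Force

$watcher = [System.IO.FileSystemWatcher]::new($folder)
$watcher.IncludeSubdirectories = $true
$watcher.EnableRaisingEvents = $true

# Placeholder action; in the backup job this would call LogBackupEntry.ps1.
# For Renamed events, SourceEventArgs.FullPath is the new name.
$action = { Write-Host "$($Event.SourceEventArgs.ChangeType): $($Event.SourceEventArgs.FullPath)" }

foreach ($eventName in 'Changed', 'Created', 'Renamed') {
    # SourceIdentifier must be unique per subscription, so include the event name
    $null = Register-ObjectEvent -InputObject $watcher -EventName $eventName `
        -SourceIdentifier "FileChange-WatchDemo-$eventName" -Action $action
}

# List the subscriptions that were just created
Get-EventSubscriber | Where-Object SourceIdentifier -like 'FileChange-WatchDemo-*'
```

When experimenting interactively, you can remove the subscriptions again by piping the same Get-EventSubscriber filter to Unregister-Event.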

Remember, all of this runs as part of a PowerShell scheduled job, in other words in its own runspace. For the event subscribers to persist, that runspace has to keep running, so the job action ends with an endless loop:

```powershell
Do {
    Start-Sleep -Seconds 1
} while ($True)
```

Maybe not the most elegant approach but it works.

Registering the PowerShell Scheduled Job

The last step is to create the scheduled job. Because I want this to run automatically and constantly, I created a job trigger to run the job at startup.

```powershell
$trigger = New-JobTrigger -AtStartup
Register-ScheduledJob -Name "DailyWatcher" -ScriptBlock $action -Trigger $trigger
```

I can now manually start the task in Task Scheduler or start it from PowerShell.

```powershell
Get-ScheduledTask -TaskName DailyWatcher | Start-ScheduledTask
```

Here is my complete registration script.

```powershell
#requires -version 5.1
#requires -Modules PSScheduledJob

#create filesystemwatcher job for my incremental backups.

#scheduled job scriptblock
$action = {
    if (Test-Path c:\scripts\myBackupPaths.txt) {
        #filter out commented lines and lines with just white space
        $paths = Get-Content c:\scripts\myBackupPaths.txt | Where-Object {$_ -match "(^[^#]\S*)" -and $_ -notmatch "^\s+$"}
    }
    else {
        Throw "Failed to find c:\scripts\myBackupPaths.txt"
        #bail out
        Return
    }

    #trim leading and trailing white spaces in each path
    Foreach ($Path in $Paths.Trim()) {
        #get the directory name from the list of paths
        $name = ((Split-Path $path -Leaf).replace(' ', ''))
        #build the path of the CSV log file for this folder
        $log = "D:\Backup\{0}-log.csv" -f $name
        #define the watcher object
        Write-Host "Creating a FileSystemWatcher for $Path" -ForegroundColor green
        $watcher = [System.IO.FileSystemWatcher]($path)
        $watcher.IncludeSubdirectories = $True
        #enable the watcher
        $watcher.EnableRaisingEvents = $True
        #the Action scriptblock to be run when an event fires
        $sbtext = "c:\scripts\LogBackupEntry.ps1 -event `$event -CSVPath $log"
        $sb = [scriptblock]::Create($sbtext)

        #register the event subscriber
        #possible events are Changed, Created, Deleted and Renamed
        $params = @{
            InputObject      = $watcher
            Eventname        = "changed"
            SourceIdentifier = "FileChange-$Name"
            MessageData      = "A file was created or changed in $Path"
            Action           = $sb
        }
        $params.MessageData | Out-String | Write-Host -ForegroundColor cyan
        $params.Action | Out-String | Write-Host -ForegroundColor Cyan
        Register-ObjectEvent @params
    } #foreach path

    Get-EventSubscriber | Out-String | Write-Host -ForegroundColor yellow

    #keep the job alive
    Do {
        Start-Sleep -Seconds 1
    } while ($True)
} #close job action

$trigger = New-JobTrigger -AtStartup
Register-ScheduledJob -Name "DailyWatcher" -ScriptBlock $action -Trigger $trigger
```
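Because I prefer to remove and recreate the job rather than edit it, the last two lines could be wrapped in a small helper that also works on a second run. This is a sketch of a hypothetical Reset-ScheduledWatcherJob function. It assumes Windows PowerShell 5.1 with the PSScheduledJob module, the same requirements as the registration script.

```powershell
# Hypothetical helper: unregister the scheduled job if it already exists,
# then register it again with an at-startup trigger.
# Assumes Windows PowerShell 5.1 and the PSScheduledJob module.
function Reset-ScheduledWatcherJob {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string]$Name,
        [Parameter(Mandatory)]
        [scriptblock]$ScriptBlock
    )
    $existing = Get-ScheduledJob -Name $Name -ErrorAction SilentlyContinue
    if ($existing) {
        # -Force also stops any running instance, such as the endless watcher loop
        Unregister-ScheduledJob -Name $Name -Force
    }
    $trigger = New-JobTrigger -AtStartup
    Register-ScheduledJob -Name $Name -ScriptBlock $ScriptBlock -Trigger $trigger
}
```

With $action defined as above, re-running the registration script would then end with `Reset-ScheduledWatcherJob -Name DailyWatcher -ScriptBlock $action`.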

Next Steps

At this point I have a PowerShell scheduled job running essentially in the background, monitoring folders for file changes and logging to a CSV file. Next time I'll walk you through how I use that data.

Great stuff here!

I’ve learned some techniques that may be of use in my work!

Can’t wait to see the next post 😉

Oh, by the way, if someone wants to use the data with an archiver like 7-Zip, this simple script works (waiting for a more sophisticated approach in the next post, I’m sure of it).

It gets the files logged in the .csv and creates an archive with them. The “Get-Unique” command is there to clean up the duplicate entries generated by FileSystemWatcher. Here it is:

```powershell
$changes = Import-Csv -Path "D:\backup\Work-log.csv"

# Get-Unique only removes *adjacent* duplicates, so sort the paths first
$Array = $changes.Path | Sort-Object | Get-Unique

$Date = Get-Date -Format yyyyMMdd
$Extension = "7z"
$Archive = "D:\Backup\$Date" + "_Backup.$Extension"
$7zexePath = "C:\Program Files\7-Zip\7z.exe"

# Pass each file as its own argument; PowerShell quotes paths that contain spaces
& $7zexePath a "-t$Extension" $Archive $Array

# 7z.exe is a native program, so check its exit code instead of relying on try/catch
if ($LASTEXITCODE -ne 0) {
    Write-Warning "Error: 7-Zip exited with code $LASTEXITCODE"
}
```