We're almost to the end of my PowerShell backup system. Last time I showed you how I handle my daily incremental backups. Today I figured I should circle back and go over how I handle weekly full backups. Remember, I am only concerned about backing up a handful of critical folders. I've saved that list to a file which I can update at any time should I need to start backing up something else.


The PowerShell Scheduled Job

Let's start by looking at the code I use to set up a PowerShell scheduled job that runs every Friday night at 10:00 PM. I save the code in a script file so I can recreate the job if I need to.

#create weekly full backups
$trigger = New-JobTrigger -At 10:00PM -DaysOfWeek Friday -WeeksInterval 1 -Weekly
$jobOpt = New-ScheduledJobOption -RunElevated -RequireNetwork -WakeToRun
$params = @{
    FilePath           = "C:\scripts\WeeklyFullBackup.ps1"
    Name               = "WeeklyFullBackup"
    Trigger            = $trigger
    ScheduledJobOption = $jobOpt
    MaxResultCount     = 5
    Credential         = "$env:computername\jeff"
}
Register-ScheduledJob @params

One thing I want to point out is that instead of defining a scriptblock I'm specifying a file path. This way I can adjust the script all I want without having to recreate or modify the scheduled job. Granted, since I have this in a script file it isn't that difficult but I don't like having to touch something that is working if I don't have to. I'm including a credential because part of my task will include copying the backup archive files to my Synology NAS device.

The Backup Control Script

The script that the scheduled job invokes is pretty straightforward. It has to process the list of directories and back up each folder to a RAR file. I'm using WinRAR for my archiving solution. You can substitute your own backup or archiving code. I have some variations I'll save for future articles. Each archive file is then moved to my NAS device. Now that I think of it, I should probably add some code to also send a copy to one of my cloud drives like OneDrive.

Here's the complete script.

#requires -version 5.1
[cmdletbinding(SupportsShouldProcess)]
Param()
If (Test-Path -Path c:\scripts\mybackupPaths.txt) {
    #filter out blanks and commented lines
    $paths = Get-Content c:\scripts\mybackupPaths.txt | Where-Object { $_ -match "(^[^#]\S*)" -and $_ -notmatch "^\s+$" }
    #import my custom module
    Import-Module C:\scripts\PSRAR\Dev-PSRar.psm1 -force
    $paths | ForEach-Object {
        #reset the flag for each path so a skipped or failed backup never clears a log
        $ok = $False
        if ($pscmdlet.ShouldProcess($_)) {
            Try {
                #invoke a control script using my custom module
                C:\scripts\RarBackup.ps1 -Path $_ -Verbose -ErrorAction Stop
                $ok = $True
            }
            Catch {
                $ok = $False
                Write-Warning $_.exception.message
            }
        }
        #clear corresponding incremental log files
        $name = ((Split-Path $_ -Leaf).replace(' ', ''))
        #specify the directory for the CSV log files
        $log = "D:\Backup\{0}-log.csv" -f $name
        if ($ok -AND (Test-Path $log) -AND ($pscmdlet.ShouldProcess($log, "Clear Log"))) {
            Remove-Item -path $log
        }
    }
}
else {
    Write-Warning "Failed to find c:\scripts\mybackupPaths.txt"
}

The RarBackup.ps1 script does the actual archiving and copying to the NAS.
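One note on the path list before moving on. The filter in the control script skips blank lines and lines that begin with #, but an indented comment would slip through because its first character is a space. Here is a minimal sketch of a slightly stricter filter. The function name Get-BackupPath and the sample paths are hypothetical and made up for illustration; they are not part of my actual scripts.

```powershell
#a hypothetical helper: return only the usable paths from a list of lines
Function Get-BackupPath {
    [cmdletbinding()]
    Param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [AllowEmptyString()]
        [string]$Line
    )
    Process {
        $trimmed = $Line.Trim()
        #skip blank lines and comments, even if the comment is indented
        if ($trimmed -and $trimmed -notmatch '^#') {
            $trimmed
        }
    }
}

#sample content in the style of mybackupPaths.txt (made-up paths)
$sample = @(
    '#my critical folders'
    'C:\Scripts'
    ''
    '   '
    '  #C:\Temp is commented out'
    'C:\Users\Jeff\Documents'
)

$paths = $sample | Get-BackupPath
$paths
```

With a file on disk you could pipe Get-Content to the function in the same way. Only the two real paths should come out the other end.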

The Backup Script

This file invokes my RAR commands to create the archive and copy it to the NAS. I have code using Get-FileHash to validate that the file copy was successful.

#requires -version 5.1
[cmdletbinding(SupportsShouldProcess)]
Param(
    [Parameter(Mandatory)]
    [ValidateScript( { Test-Path $_ })]
    [string]$Path,
    #my temporary work area with plenty of disk space
    [ValidateScript( { Test-Path $_ })]
    [string]$TempPath = "D:\Temp",
    [ValidateSet("FULL", "INCREMENTAL")]
    [string]$Type = "FULL"
)
Write-Verbose "[$(Get-Date)] Starting $($myinvocation.MyCommand)"
if (-Not (Get-Module Dev-PSRar)) {
    Import-Module C:\scripts\PSRAR\Dev-PSRar.psm1 -force
}
#replace spaces in path names
$name = "{0}_{1}-{2}.rar" -f (Get-Date -format "yyyyMMdd"), (Split-Path -Path $Path -Leaf).replace(' ', ''), $Type
$target = Join-Path -Path $TempPath -ChildPath $name
#I have hard coded my NAS backup. Would be better as a parameter with a default value.
$nasPath = Join-Path -Path \\DS416\backup -ChildPath $name
Write-Verbose "[$(Get-Date)] Archiving $path to $target"
if ($pscmdlet.ShouldProcess($Path)) {
    #Create the RAR archive - you can use any archiving technique you want
    Add-RARContent -path $Path -Archive $target -CompressionLevel 5 -Comment "$Type backup of $(($Path).ToUpper()) from $env:Computername"
    Write-Verbose "[$(Get-Date)] Copying $target to $nasPath"
    Try {
        #copy the RAR file to the NAS for offline storage
        Copy-Item -Path $target -Destination $nasPath -ErrorAction Stop
    }
    Catch {
        Write-Warning "Failed to copy $target. $($_.exception.message)"
        Throw $_
    }
    #verify the file was copied successfully
    Write-Verbose "[$(Get-Date)] Validating file hash"
    $here = Get-FileHash $target
    $there = Get-FileHash $nasPath
    if ($here.hash -eq $there.hash) {
        #delete the file if the hashes match
        Write-Verbose "[$(Get-Date)] Deleting $target"
        Remove-Item $target
    }
    else {
        Write-Warning "File hash difference detected."
        Throw "File hash difference detected"
    }
}
Write-Verbose "[$(Get-Date)] Ending $($myinvocation.MyCommand)"
#end of script file

Structurally, I could have put all of this code into a single PowerShell script. But I like a more modular approach so that I can re-use scripts or commands. Or if I need to revise something I don't have to worry about breaking a monolithic script.
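In that modular spirit, the copy-and-verify step is a good candidate for its own command. What follows is only a sketch of how that might look, not something I am running today. The function name Copy-VerifiedItem, its parameters, and the scratch folder used in the demo are all hypothetical. The destination is a parameter here instead of a hard-coded NAS path.

```powershell
#a hypothetical function that copies a file and verifies the copy by file hash
Function Copy-VerifiedItem {
    [cmdletbinding(SupportsShouldProcess)]
    Param(
        [Parameter(Mandatory)]
        [ValidateScript( { Test-Path $_ -PathType Leaf })]
        [string]$Path,
        [Parameter(Mandatory)]
        [ValidateScript( { Test-Path $_ -PathType Container })]
        [string]$Destination
    )

    $copy = Join-Path -Path $Destination -ChildPath (Split-Path -Path $Path -Leaf)
    if ($pscmdlet.ShouldProcess($Path, "Copy to $Destination")) {
        Copy-Item -Path $Path -Destination $copy -ErrorAction Stop
        #compare the hash of the source with the hash of the copy
        $here = Get-FileHash -Path $Path
        $there = Get-FileHash -Path $copy
        if ($here.Hash -ne $there.Hash) {
            Throw "File hash difference detected for $copy"
        }
        #write the verified copy to the pipeline
        Get-Item -Path $copy
    }
}

#try it with a scratch file and folder under the temp directory
$work = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath "verify-$(New-Guid)"
$dest = New-Item -Path (Join-Path -Path $work -ChildPath "nas") -ItemType Directory -Force
$file = Join-Path -Path $work -ChildPath "20191101_Scripts-FULL.rar"
Set-Content -Path $file -Value "not a real archive"

$result = Copy-VerifiedItem -Path $file -Destination $dest.FullName
$result.FullName
```

Unlike RarBackup.ps1, this sketch does not delete the source file after a successful comparison. I would leave that decision to the calling script.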

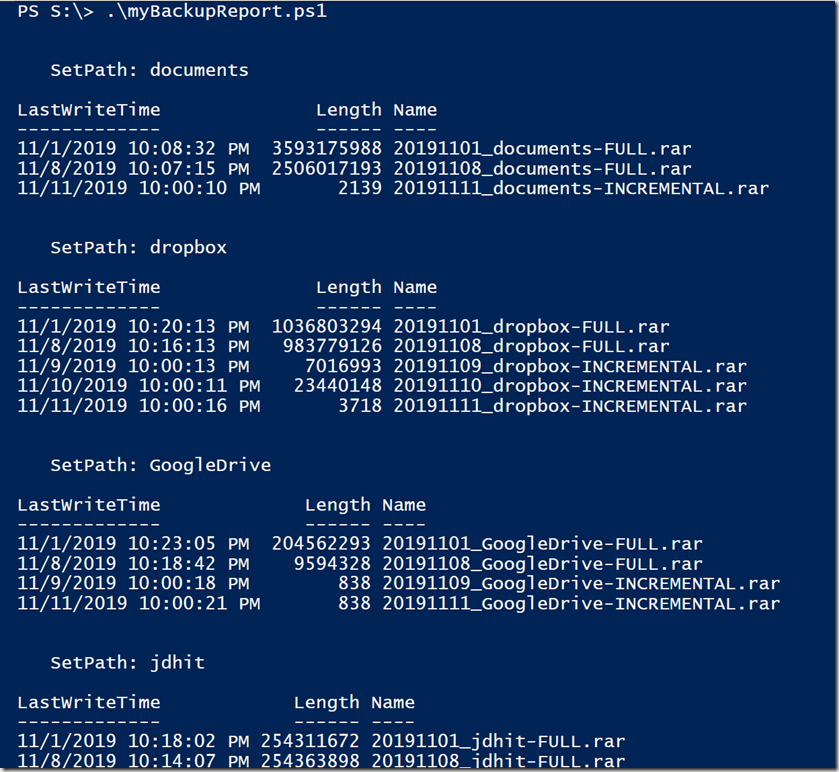

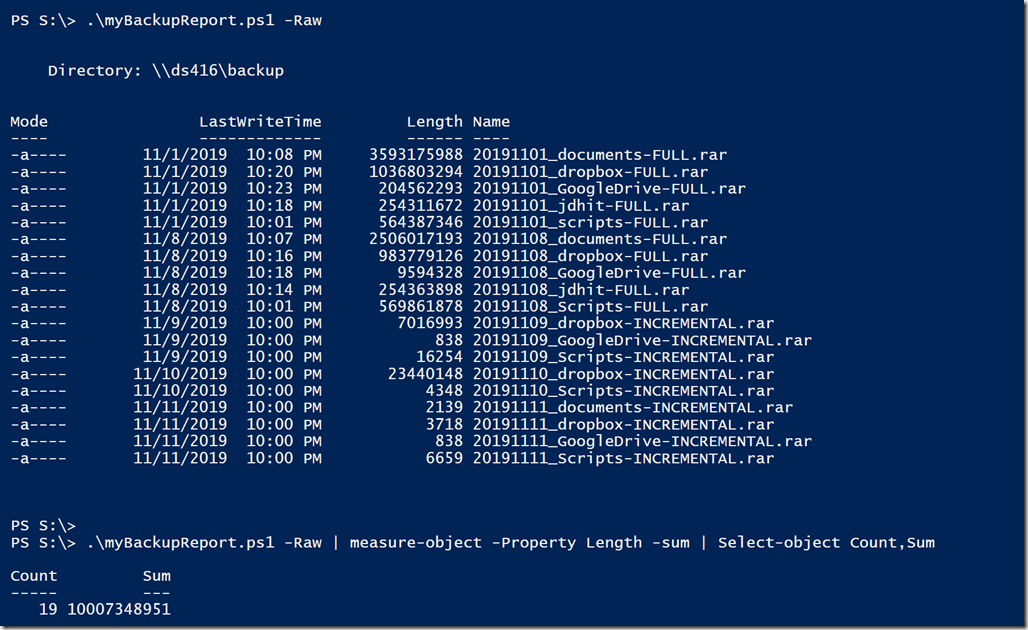

Reporting

The last step I want to share is reporting. I don't worry too much about checking the results of scheduled jobs. All I need to do is check my backup folder for my archive files. Because my backup archives follow a standard naming convention, I can use regular expressions to match on the file name. I use this control script to see my backup archives.

#requires -version 5.1
[cmdletbinding()]
Param(
    [Parameter(Position = 0, HelpMessage = "Enter the path with extension of the backup files.")]
    [ValidateNotNullOrEmpty()]
    [ValidateScript( { Test-Path $_ })]
    [string]$Path = "\\ds416\backup",
    [Parameter(HelpMessage = "Get backup files only with no formatted output.")]
    [Switch]$Raw
)
<#
A regular expression pattern to match on backup file name with named captures
to be used in adding some custom properties. My backup names are like:
20191101_Scripts-FULL.rar
20191107_Scripts-INCREMENTAL.rar
#>
[regex]$rx = "^20\d{6}_(?<set>\w+)-(?<type>\w+)\.rar$"
<#
I am doing some 'pre-filtering' on the file extension and then using the regular
expression filter to fine tune the results
#>
$files = Get-ChildItem -path $Path -filter *.rar | Where-Object { $rx.IsMatch($_.name) }
#add some custom properties to be used with formatted results based on named captures
foreach ($item in $files) {
    $match = $rx.Match($item.name)
    $item | Add-Member -MemberType NoteProperty -Name SetPath -Value $match.Groups["set"].Value
    $item | Add-Member -MemberType NoteProperty -Name SetType -Value $match.Groups["type"].Value
}
if ($raw) {
    $files
}
else {
    $files | Sort-Object SetPath, SetType, LastWriteTime | Format-Table -GroupBy SetPath -Property LastWriteTime, Length, Name
}

The default behavior is to show formatted output.

Normally, you would never want to include Format cmdlets in your code. But in this case, I am using a script to give me a result I want without having to do a lot of typing. That's why this is a script and not a function. With this in mind, the control script also has a -Raw parameter which writes the file objects to the pipeline.
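If the regular expression is the part that looks like magic, here is a small sketch you can run on its own to see what the named captures pull out of a file name. The pattern is the one from the reporting script. The list of names is made up for illustration, including one with an extra hyphen to show a limit of the pattern: \w+ does not match a hyphen, so a backup set name like My-Docs would not be picked up.

```powershell
#the same pattern used in the reporting script
[regex]$rx = "^20\d{6}_(?<set>\w+)-(?<type>\w+)\.rar$"

#made-up file names for illustration
$names = @(
    "20191101_Scripts-FULL.rar"
    "20191107_Scripts-INCREMENTAL.rar"
    "notes.rar"
    "20191101_My-Docs-FULL.rar"
)

$parsed = foreach ($name in $names) {
    $m = $rx.Match($name)
    #only names that follow the convention produce output
    if ($m.Success) {
        [pscustomobject]@{
            Name    = $name
            SetPath = $m.Groups["set"].Value
            SetType = $m.Groups["type"].Value
        }
    }
}
$parsed
```

Only the first two names should produce objects, each with a SetPath of Scripts and a SetType taken from the end of the file name.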

As shown earlier, my weekly backup script removes the incremental log files after a successful full backup. For now, I will manually remove older full backups, although I could add code to keep only the last 5.
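Keeping only the last 5 full backups could look something like the sketch below. This is not in my scripts yet, so treat it as a starting point. The function name Get-ExpiredFullBackup and the -Keep parameter are hypothetical, and the demo runs against empty placeholder files in a temp folder instead of my NAS. It relies on my naming convention: because the date in the file name is yyyyMMdd, sorting by name within a backup set also sorts by date.

```powershell
#a hypothetical function to find full backups beyond the newest N for each backup set
Function Get-ExpiredFullBackup {
    [cmdletbinding()]
    Param(
        [Parameter(Mandatory)]
        [ValidateScript( { Test-Path $_ })]
        [string]$Path,
        [ValidateRange(1, 100)]
        [int]$Keep = 5
    )

    #match only full backups and capture the backup set name
    [regex]$rx = "^20\d{6}_(?<set>\w+)-FULL\.rar$"

    Get-ChildItem -Path $Path -Filter *.rar |
        Where-Object { $rx.IsMatch($_.Name) } |
        Group-Object -Property { $rx.Match($_.Name).Groups["set"].Value } |
        ForEach-Object {
            #newest first, then skip the ones to keep
            $_.Group | Sort-Object -Property Name -Descending | Select-Object -Skip $Keep
        }
}

#demo with seven empty placeholder files in a scratch folder
$demo = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath "prune-$(New-Guid)"
New-Item -Path $demo -ItemType Directory | Out-Null
1..7 | ForEach-Object {
    $file = Join-Path -Path $demo -ChildPath ("2019110{0}_Scripts-FULL.rar" -f $_)
    New-Item -Path $file -ItemType File | Out-Null
}

$expired = Get-ExpiredFullBackup -Path $demo -Keep 5
$expired.Name

#preview the cleanup without deleting anything
$expired | Remove-Item -WhatIf
```

The function only reports the files. Piping to Remove-Item with -WhatIf lets me review the list before I commit to deleting anything.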

This system is still new and I'm constantly tweaking and adding new tools. I don't feel that I can turn this into a complete, standalone PowerShell module given that I have written my code for me and my network. But there should be enough of my code that you can utilize to create your own solution. I have some other backup ideas and techniques I'll share with you another time. In the meantime, I'd love to hear what you think about all of this.

Hi Jeff, you do amazing work 🙂. It would be nice to have this backup solution in a repository, to make it easy to get the whole solution and any further updates.

Thanks in advance.

The code is very specific to me, but I can see some value. I’ll see what I can do about pushing it to a GitHub repo.

The backup material is available at https://github.com/jdhitsolutions/PSBackup

Thanks! Really great work

Thanks for your time, Jeffrey. I'll keep you posted on my version :).